Upgrade OpenMetadata

In this guide, you will find all the necessary information to safely upgrade your OpenMetadata instance to 1.9.x.

Prerequisites

Every time you plan to upgrade OpenMetadata to a newer version, make sure to go over all of these steps:

Backup your Metadata

Before upgrading your OpenMetadata version we strongly recommend backing up the metadata.

The source of truth is stored in the underlying database (MySQL and Postgres are supported). Each version upgrade runs a database migration process that acts directly on your database and updates the shape of the data to match the newest OpenMetadata release.

Backing up the data is important because, if you face any unexpected issues during the upgrade process, you will be able to get back to the previous version without any loss.

You can learn more about how the migration process works here.

Please note that the backup is only a safety net for the upgrade and should not be used to restore data into a higher version.

Since version 1.4.0, OpenMetadata encourages using the built-in tools for creating logical backups of the metadata:
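As a rough sketch of what such a logical backup could look like, assuming the built-in tools meant here are the databases' own dump utilities (mysqldump and pg_dump), the snippet below builds and prints one invocation for each engine so it can be reviewed before being run. The host, user, database name and output file are illustrative assumptions, not values taken from this guide.

```shell
# All values below are illustrative assumptions; replace them with your own.
DB_HOST="localhost"
DB_USER="openmetadata_user"
DB_NAME="openmetadata_db"
BACKUP_FILE="openmetadata_backup.sql"

# MySQL: the bare -p flag makes mysqldump prompt for the password.
MYSQL_BACKUP_CMD="mysqldump --single-transaction --no-tablespaces -h ${DB_HOST} -u ${DB_USER} -p ${DB_NAME} > ${BACKUP_FILE}"

# Postgres: pg_dump writes a plain-text SQL dump to the file given with -f.
POSTGRES_BACKUP_CMD="pg_dump -h ${DB_HOST} -U ${DB_USER} -d ${DB_NAME} -f ${BACKUP_FILE}"

# Print the commands instead of executing them.
echo "$MYSQL_BACKUP_CMD"
echo "$POSTGRES_BACKUP_CMD"
```

Only one of the two commands applies to a given deployment. Store the resulting file somewhere outside the database host before starting the upgrade.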

For production deployments, we recommend relying on managed cloud services for your database, such as AWS RDS, Azure SQL or GCP Cloud SQL.

If you're a user of these services, you can leverage their backup capabilities directly:

You can refer to the following guide to get more details about the backup and restore:

Understanding the "Running" State in OpenMetadata

In OpenMetadata, the "Running" state indicates that the OpenMetadata server has received a response from Airflow confirming that a workflow is in progress. However, if Airflow unexpectedly stops or crashes before it can send a failure status update through the Failure Callback, OpenMetadata remains unaware of the workflow’s actual state. As a result, the workflow may appear to be stuck in "Running" even though it is no longer executing.

This situation can also occur during an OpenMetadata upgrade. If an ingestion pipeline was running at the time of the upgrade and the process caused Airflow to shut down, OpenMetadata would not receive any further updates from Airflow. Consequently, the pipeline status remains "Running" indefinitely.

Expected Steps to Resolve

To resolve this issue:

- Ensure that Airflow is restarted properly after an unexpected shutdown.

- Manually update the pipeline status if necessary.

- Check Airflow logs to verify if the DAG execution was interrupted.

Update sort_buffer_size (MySQL) or work_mem (Postgres)

Before running the migrations, it is important to update these parameters to ensure there are no runtime errors. A safe value for either parameter is 20MB.

If using MySQL

You can update it via SQL (note that it will reset after the server restarts):
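A minimal sketch of that statement, assuming the 20MB value recommended above: the snippet converts 20MB to bytes and prints the resulting SQL so it can be pasted into a MySQL client. The variable names are only for illustration.

```shell
# 20 MB in bytes, the unit sort_buffer_size is expressed in.
SORT_BUFFER_BYTES=$((20 * 1024 * 1024))

# SET GLOBAL changes the server-wide default until the next restart.
MYSQL_STATEMENT="SET GLOBAL sort_buffer_size = ${SORT_BUFFER_BYTES};"

# Print the statement; run it from a MySQL client with sufficient privileges.
echo "$MYSQL_STATEMENT"   # prints: SET GLOBAL sort_buffer_size = 20971520;
```

A global value set this way is picked up by connections opened afterwards, so it is worth applying it before starting the migration.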

To make the configuration persistent, you'd need to navigate to your MySQL Server install directory and update the my.ini or my.cnf files with sort_buffer_size = 20971520.

If using RDS, you will need to update your instance's Parameter Group to include the above change.

If using Postgres

You can update it via SQL (note that it will reset after the server restarts):
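A minimal sketch of that statement, assuming the 20MB value recommended above. The snippet only prints the SQL so it can be pasted into a Postgres client; the variable names are illustrative.

```shell
# Postgres accepts memory settings with a unit suffix.
WORK_MEM="20MB"

POSTGRES_STATEMENT="SET work_mem = '${WORK_MEM}';"

# Print the statement; run it from a Postgres client such as psql.
echo "$POSTGRES_STATEMENT"   # prints: SET work_mem = '20MB';
```

Keep in mind that in Postgres a plain SET only lasts for the session that issues it. If the migration connects through its own session, the persistent configuration described next is the more reliable route.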

To make the configuration persistent, you'll need to update the postgresql.conf file with work_mem = 20MB.

If using RDS, you will need to update your instance's Parameter Group to include the above change.

Note that the right value depends on the size of your query_entity table. If you still see Out of Sort Memory errors during the migration after bumping this value, you can increase it further.

After the migration is finished, you can revert these changes.

Backward Incompatible Changes

1.9.0

Strong validation of test case parameters

The parameterValues names of a testCase are now strictly validated against the parameterDefinition names in its testDefinition. If the parameter names do not match, an error is thrown when the testCase is created.

Multi-domain Support

All entities now support multi-domains. Their domain field is now renamed to domains and modelled as a list of domains instead of a single domain.

If you're using the API or the SDK, you will need to update your code to use the new domains field instead of domain. We have also updated the patch_domain implementation, which now has a new signature to support the new domains field.

While the schema and APIs are all updated, the Multi-domain support is not enabled by default.

If you want to allow your assets to belong to multiple domains, you need to go to Settings > Preferences > Data Asset Rules and disable the Multiple Domains are not allowed rule.

Upgrade your installation

Once your metadata is safe, follow the required upgrade instructions based on your environment:

- Upgrade your Kubernetes installation

- Upgrade a Docker Deployment: upgrade your Docker installation

- Upgrade a Bare Metal Deployment: upgrade your Bare Metal installation

Post-Upgrade Steps

Reindex

With UI

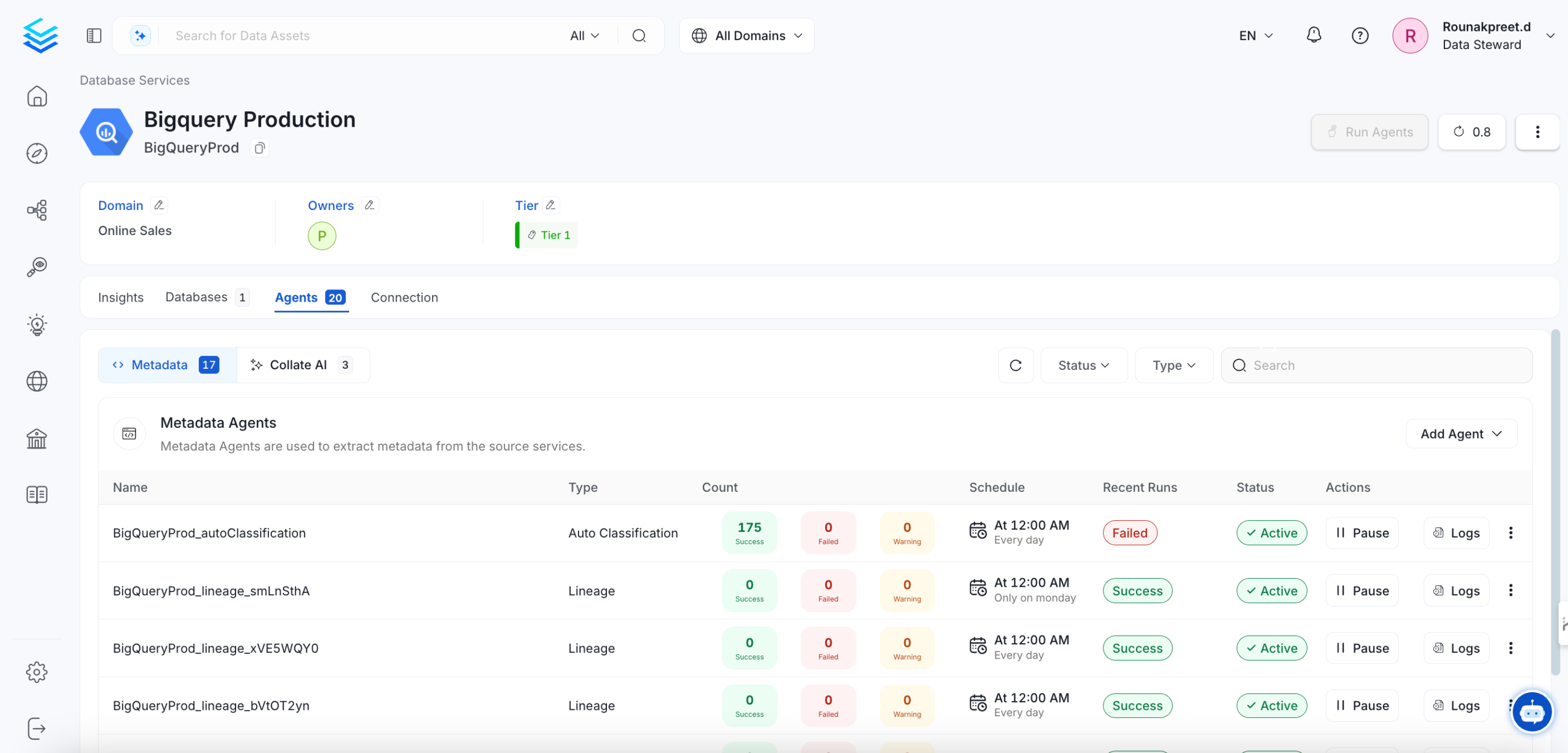

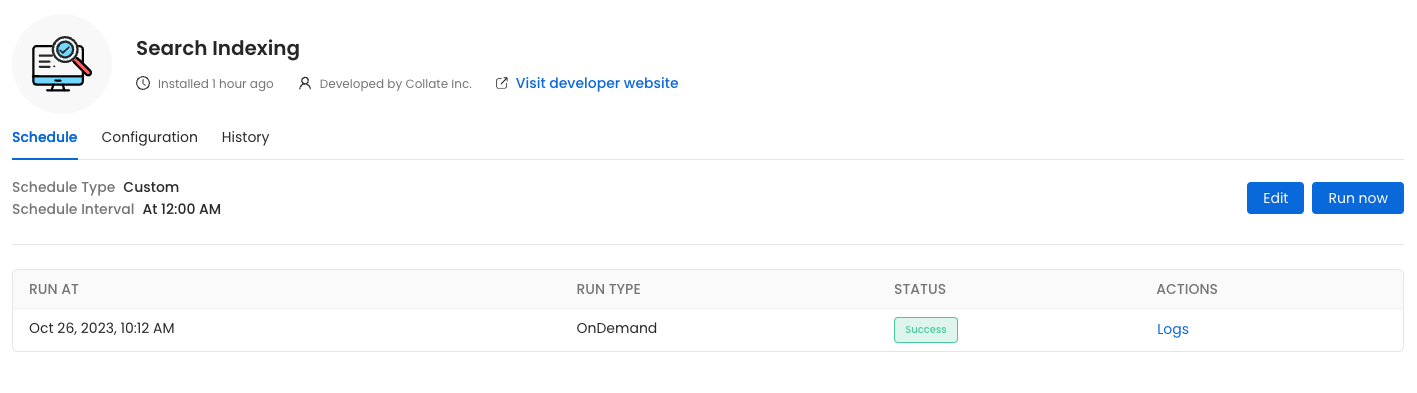

Go to Settings -> Applications -> Search Indexing

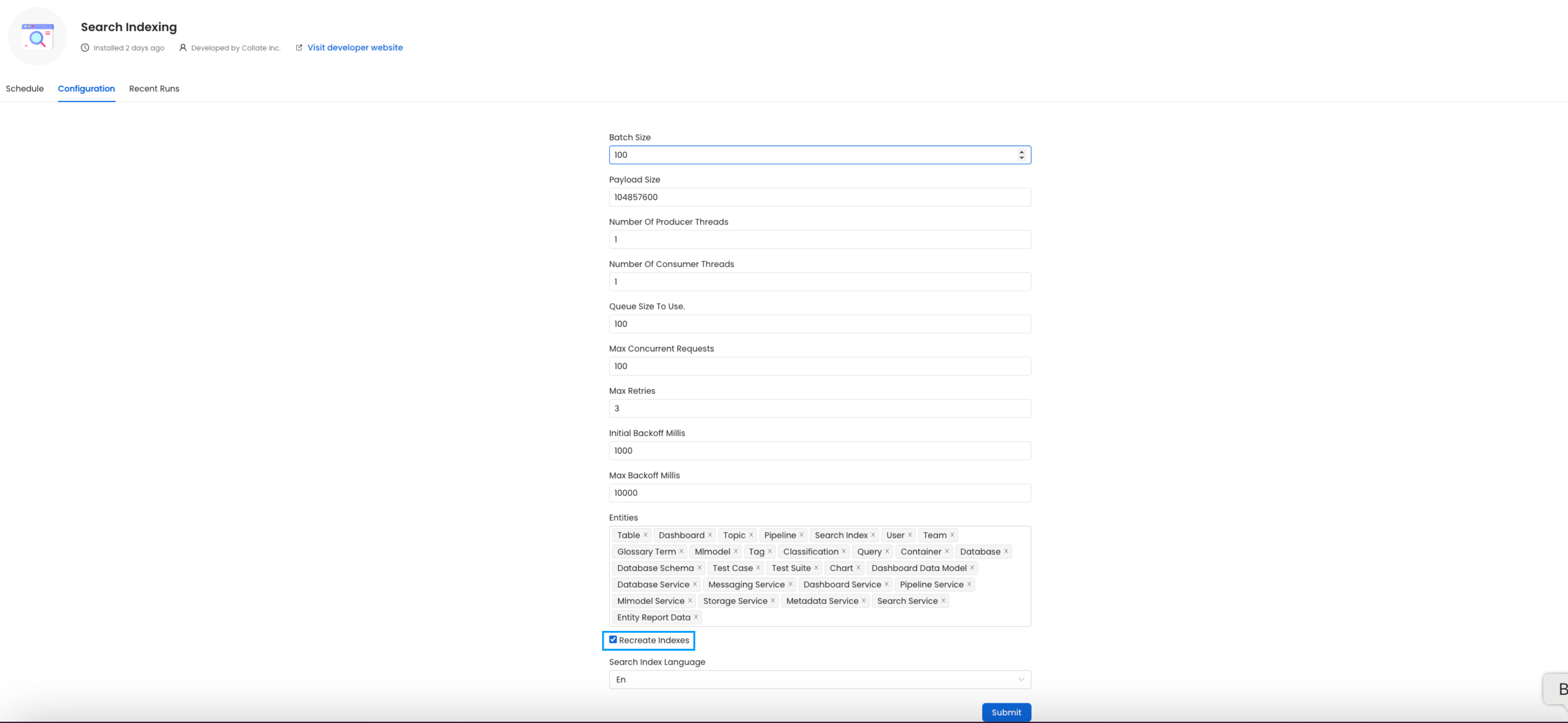

Before initiating the process by clicking Run Now, ensure that the Recreate Indexes option is enabled to allow rebuilding the indexes as needed.

In the configuration section, you can select the entities you want to reindex.

Because reindexing is required after the upgrade, select All so that every entity is reindexed.

With Kubernetes

Follow these steps to reindex using the CLI:

- List the CronJobs: check which CronJobs are available.

- Create a Job from a CronJob: create a one-time Job from an existing CronJob, replacing <job_name> with the actual name of the job.

- Check the Job status: verify the status of the created Job.

- View logs: view the logs of the Job, replacing <job_name> with the actual job name.

(Optional) Update your OpenMetadata Ingestion Client

If you are running the ingestion workflows externally or using a custom Airflow installation, you need to make sure that the Python Client you use is aligned with the OpenMetadata server version.

For example, if you are upgrading the server to version x.y.z, you will need to update your client to openmetadata-ingestion[<plugin>]==x.y.z.

The plugin parameter is a list of the sources that you want to ingest, for example openmetadata-ingestion[mysql,snowflake,s3]==1.2.0. You will find specific instructions for each connector here.

Moreover, if you are working with your own Airflow deployment rather than the openmetadata-ingestion image, you will also need to upgrade the openmetadata-managed-apis version.
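The client and managed-apis upgrades could look roughly like the sketch below. The version and plugin list are assumptions chosen for illustration; set them to your server version and to the connectors you actually use. The snippet prints the pip commands rather than running them.

```shell
OM_VERSION="1.9.0"          # assumption: set to your server version x.y.z
PLUGINS="mysql,snowflake"   # assumption: the sources you ingest from

INGESTION_REQUIREMENT="openmetadata-ingestion[${PLUGINS}]==${OM_VERSION}"
MANAGED_APIS_REQUIREMENT="openmetadata-managed-apis==${OM_VERSION}"

# Quote each requirement so the shell does not try to expand the square brackets.
echo "pip install \"${INGESTION_REQUIREMENT}\""
echo "pip install \"${MANAGED_APIS_REQUIREMENT}\""
```

The second command is only relevant when you maintain your own Airflow deployment.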

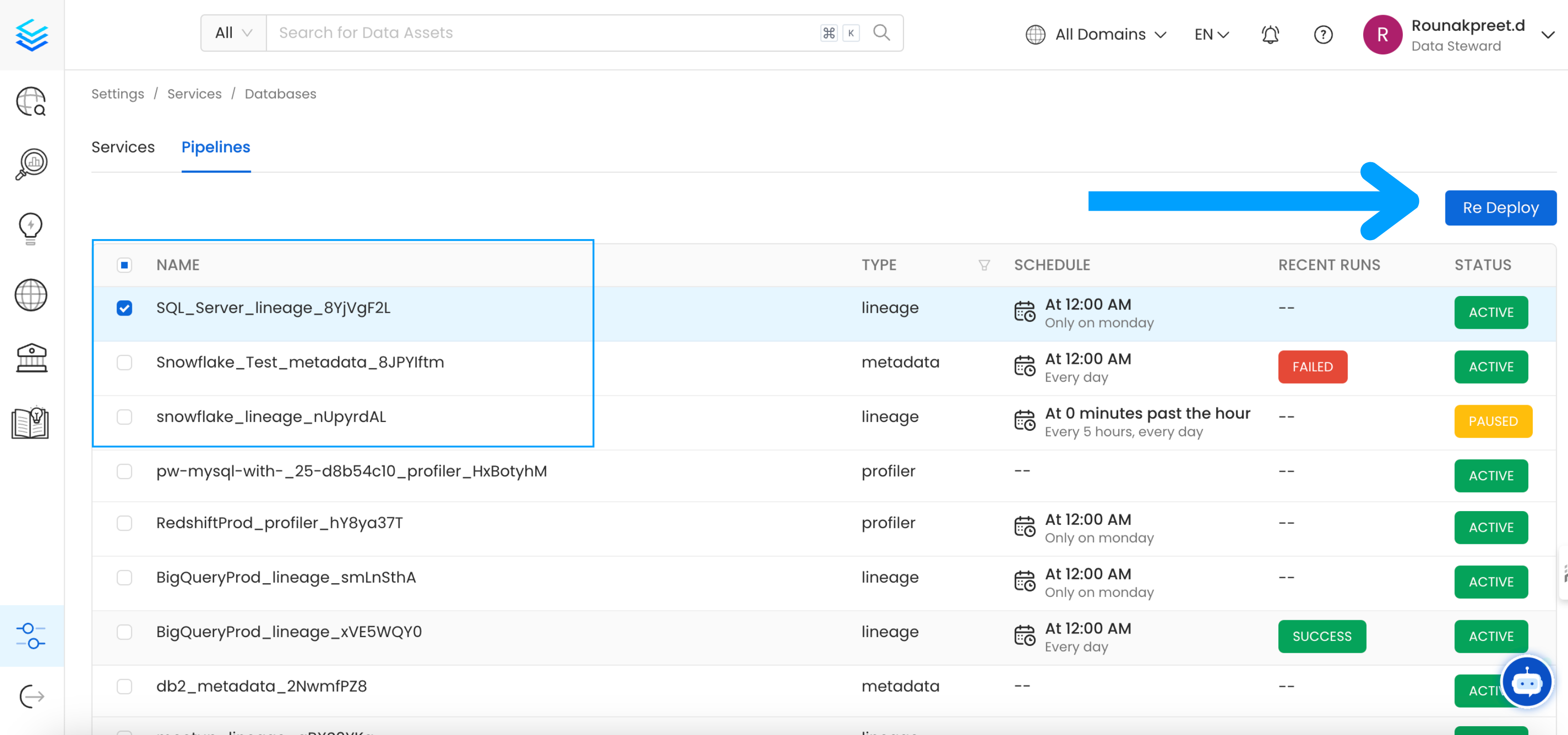

Re Deploy Ingestion Pipelines

With UI

Go to Settings -> {Services} -> {Databases} -> Pipelines

Select the pipelines you want to redeploy and click Re Deploy.

With Kubernetes

Follow these steps to deploy pipelines using the CLI:

- List the CronJobs: check which CronJobs are available.

- Create a Job from a CronJob: create a one-time Job from an existing CronJob, replacing <job_name> with the actual name of the job.

- Check the Job status: verify the status of the created Job.

- View logs: view the logs of the Job, replacing <job_name> with the actual job name.
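The four steps above can be sketched with standard kubectl commands as follows. The CronJob name cron-deploy-pipelines and the Job name are assumptions used for illustration; confirm the real CronJob name from the listing in the first step. The same pattern should apply to the reindex flow with the reindex CronJob (assumed here to be called cron-reindex). The helper prints the commands rather than running them.

```shell
# Print the kubectl commands for running a CronJob once as a Job.
# $1: CronJob name (assumed; confirm it with `kubectl get cronjobs`)
# $2: name to give the one-time Job (the <job_name> placeholder)
print_job_commands() {
  cronjob_name="$1"
  job_name="$2"
  echo "kubectl get cronjobs"
  echo "kubectl create job --from=cronjob/${cronjob_name} ${job_name}"
  echo "kubectl get jobs"
  echo "kubectl logs job/${job_name}"
}

# Hypothetical names, used only for illustration.
print_job_commands "cron-deploy-pipelines" "deploy-pipelines-manual"
```

If OpenMetadata is not installed in your current namespace, add -n <namespace> to each kubectl command.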

If you see broken DAGs, select all the pipelines from all the services and redeploy them.

Openmetadata-ops Script

Overview

The openmetadata-ops script is designed to manage and migrate databases and search indexes, reindex existing data into Elasticsearch or OpenSearch, and redeploy service pipelines.

Usage

Commands

- analyze-tables

Runs an ANALYZE on the tables of the target database to refresh their statistics.

- changelog

Prints the change log of database migration.

- check-connection

Checks if a connection can be successfully obtained for the target database.

- deploy-pipelines

Deploys all the service pipelines.

- drop-create

Deletes any tables in the configured database and creates new tables based on the current version of OpenMetadata. This command also re-creates the search indexes.

- info

Shows the list of migrations applied and the pending migrations waiting to be applied on the target database.

- migrate

Migrates the OpenMetadata database schema and search index mappings.

- migrate-secrets

Migrates secrets from the database to the configured Secrets Manager. Note that this command does not support migrating between external Secrets Managers.

- reindex

Reindexes data into the search engine from the command line.

- repair

Repairs the DATABASE_CHANGE_LOG table, which is used to track all the migrations on the target database. This involves removing entries for the failed migrations and updating the checksum of migrations already applied on the target database.

- validate

Checks if all the migrations have been applied on the target database.

Examples

Display Help

To display the help message:

Migrate Database Schema

To migrate the database schema and search index mappings:

Reindex Data

To reindex data into the search engine:
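The three examples above could be invoked roughly as in the sketch below. The script location is an assumption; point OPS_SCRIPT at wherever openmetadata-ops.sh lives in your installation. The helper prints each command line rather than running it, so nothing touches the database until you execute the printed command yourself.

```shell
# Assumed script location; adjust it to your installation.
OPS_SCRIPT="./bootstrap/openmetadata-ops.sh"

# Build the command line for a given subcommand or flag.
ops_command() {
  echo "sh ${OPS_SCRIPT} $*"
}

ops_command --help    # display the help message
ops_command migrate   # migrate the database schema and search index mappings
ops_command reindex   # reindex data into the search engine
```

Running info or validate before and after migrate is a reasonable way to confirm which migrations were pending and that all of them were applied.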