How to Write and Deploy No-Code Test Cases

OpenMetadata supports data quality tests at the table and column level on all of the supported database connectors. It supports both business-oriented tests and data engineering tests. Data engineering tests are technical sanity checks on the data: they ensure that your data meets the technical definition of the data assets, for example that columns are not null or that column values are unique.

There is no need to fill in a YAML or JSON configuration file to set up data quality tests in OpenMetadata. You can select the options and add the details directly from the UI to set up test cases.

To create a test in OpenMetadata:

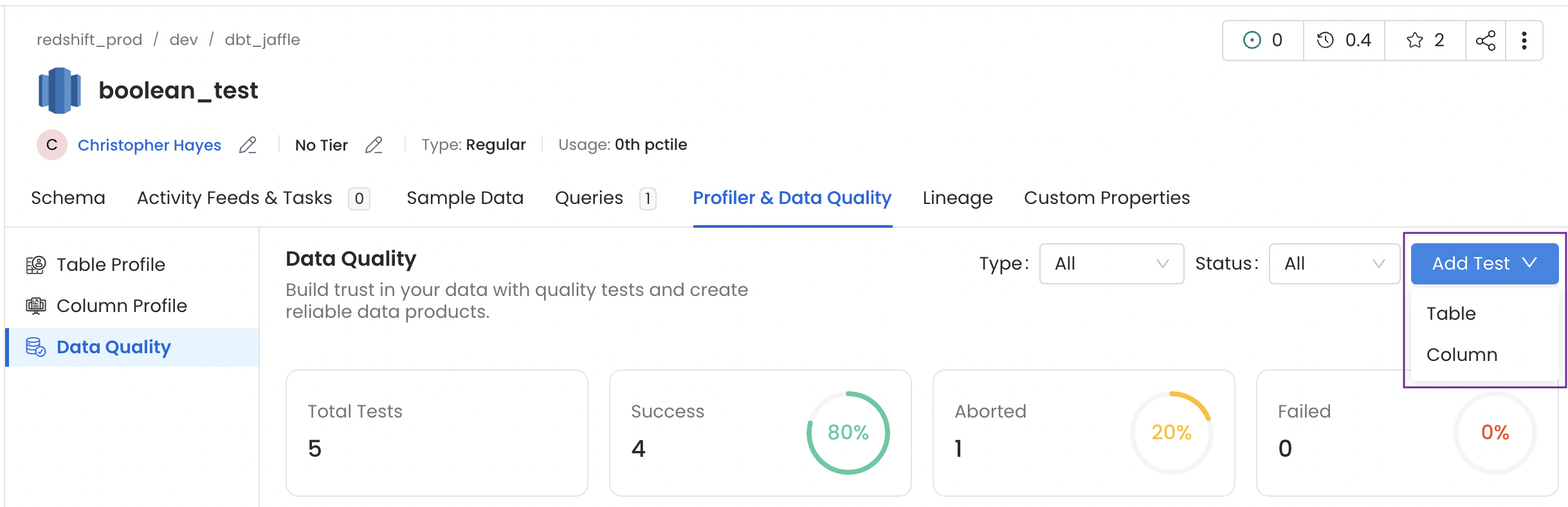

- Navigate to the table you would like to create a test for. Click on the Profiler & Data Quality tab.

- Click on Add Test to select a Table or Column level test.

Write and Deploy No-Code Test Cases

Table Level Test

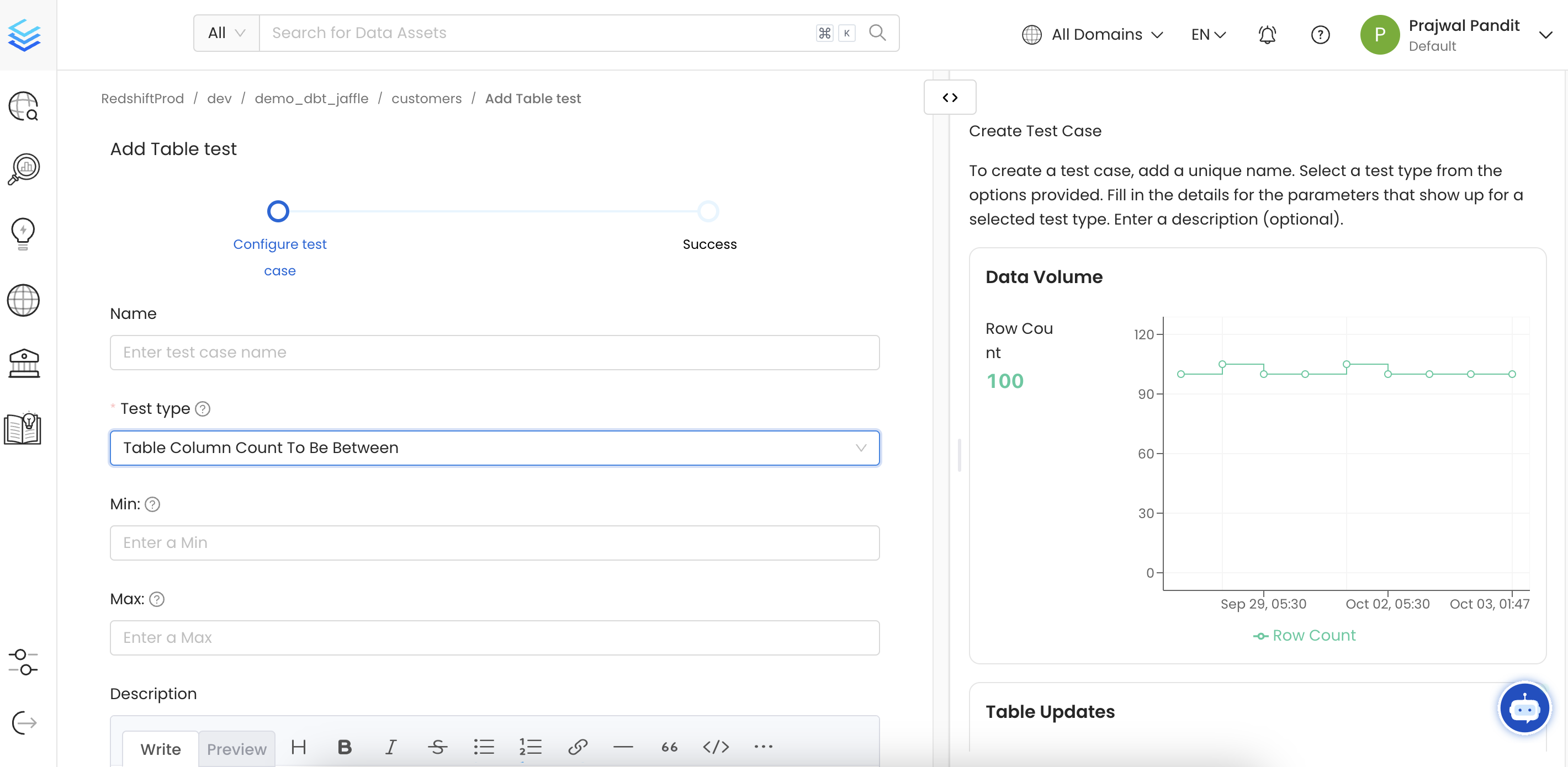

To create a Table Level Test, enter the following details:

- Name: Add a name that best defines your test case.

- Test Type: Based on the test type, you will have further fields to define your test.

- Description: Describe the test case. Click on Submit to set up a test.

OpenMetadata currently supports the following table level test types:

- Table Column Count to be Between: Define the Min. and Max.

- Table Column Count to Equal: Define a number.

- Table Column Name to Exist: Define a column name.

- Table Column Names to Match Set: Add comma-separated column names to match. You can also verify whether the column names are in order.

- Custom SQL Query: Define a SQL expression. Select whether the strategy applies to Rows or to Count. Define a threshold that determines whether the test passes or fails.

- Table Row Count to be Between: Define the Min. and Max.

- Table Row Count to Equal: Define a number.

- Table Row Inserted Count to be Between: Define the Min. and Max. row count. This test works on a column of type Timestamp, Date, or Date Time. Specify the range type in terms of Hour, Day, Month, or Year, and define the interval based on the selected range type.

- Compare 2 Tables for Differences: Compare two tables for differences, allowing a user to check data integrity.

- Table Data to Be Fresh: Validate the freshness of a table's data.
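Most of the table-level checks above reduce to simple comparisons on table metadata. The following Python sketch illustrates an assumed pass/fail logic for several of them; it is not OpenMetadata's implementation. It assumes that Min. and Max. bounds are inclusive, that an empty bound (None) is left open, and that matching a set without ordering means set equality. The freshness rule and its `max_age` parameter are hypothetical, as are all function names.

```python
from datetime import datetime, timedelta
from typing import Optional, Sequence


def _within(value: float, min_value: Optional[float], max_value: Optional[float]) -> bool:
    """Inclusive range check; a bound set to None is left open (assumption of this sketch)."""
    return (min_value is None or value >= min_value) and (max_value is None or value <= max_value)


def column_count_between(columns: Sequence[str], min_count: Optional[int], max_count: Optional[int]) -> bool:
    return _within(len(columns), min_count, max_count)


def column_count_equal(columns: Sequence[str], expected: int) -> bool:
    return len(columns) == expected


def column_name_exists(columns: Sequence[str], name: str) -> bool:
    return name in columns


def column_names_match_set(columns: Sequence[str], expected_csv: str, ordered: bool = False) -> bool:
    """Compare the table's columns with a comma-separated list of expected names."""
    expected = [name.strip() for name in expected_csv.split(",") if name.strip()]
    if ordered:
        # Same names in exactly the same order.
        return list(columns) == expected
    # Same names, order ignored (assumed to mean set equality).
    return set(columns) == set(expected)


def row_count_between(row_count: int, min_count: Optional[int], max_count: Optional[int]) -> bool:
    return _within(row_count, min_count, max_count)


def data_is_fresh(latest_update: datetime, max_age: timedelta, now: datetime) -> bool:
    """Hypothetical freshness rule: the newest record must be no older than max_age."""
    return now - latest_update <= max_age


# Usage on a hypothetical three-column table.
cols = ["id", "email", "created_at"]
print(column_count_between(cols, 2, 5))                                      # True
print(column_names_match_set(cols, "created_at, id, email"))                 # True
print(column_names_match_set(cols, "created_at, id, email", ordered=True))   # False
```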

Configure a Table Level Test
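The Rows and Count strategies of the Custom SQL Query test can be illustrated with Python's built-in `sqlite3` module. This is a minimal sketch under stated assumptions: with the Rows strategy the query selects the offending rows, with the Count strategy it returns a single count, and the test passes when the result does not exceed the threshold (0 by default here). The function name, the strategy strings, and the `orders` table are hypothetical.

```python
import sqlite3


def custom_sql_passes(conn: sqlite3.Connection, query: str, strategy: str = "ROWS", threshold: int = 0) -> bool:
    """Run a custom SQL check and compare its result with a threshold.

    ROWS:  the query selects offending rows; the result is how many rows came back.
    COUNT: the query returns a single number (e.g. SELECT COUNT(*) ...); that number is the result.
    Assumed rule: the check passes when the result does not exceed the threshold.
    """
    cursor = conn.execute(query)
    if strategy == "ROWS":
        result = len(cursor.fetchall())
    elif strategy == "COUNT":
        row = cursor.fetchone()
        result = row[0] if row is not None and row[0] is not None else 0
    else:
        raise ValueError(f"unknown strategy: {strategy!r}")
    return result <= threshold


# Usage with a hypothetical in-memory orders table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
conn.executemany("INSERT INTO orders (id, amount) VALUES (?, ?)", [(1, 10.0), (2, -5.0), (3, 7.5)])

# One order has a negative amount, so the ROWS check with threshold 0 fails.
print(custom_sql_passes(conn, "SELECT * FROM orders WHERE amount < 0", "ROWS"))  # False
# With COUNT and threshold 1, a single negative amount is tolerated.
print(custom_sql_passes(conn, "SELECT COUNT(*) FROM orders WHERE amount < 0", "COUNT", threshold=1))  # True
```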

Column Level Test

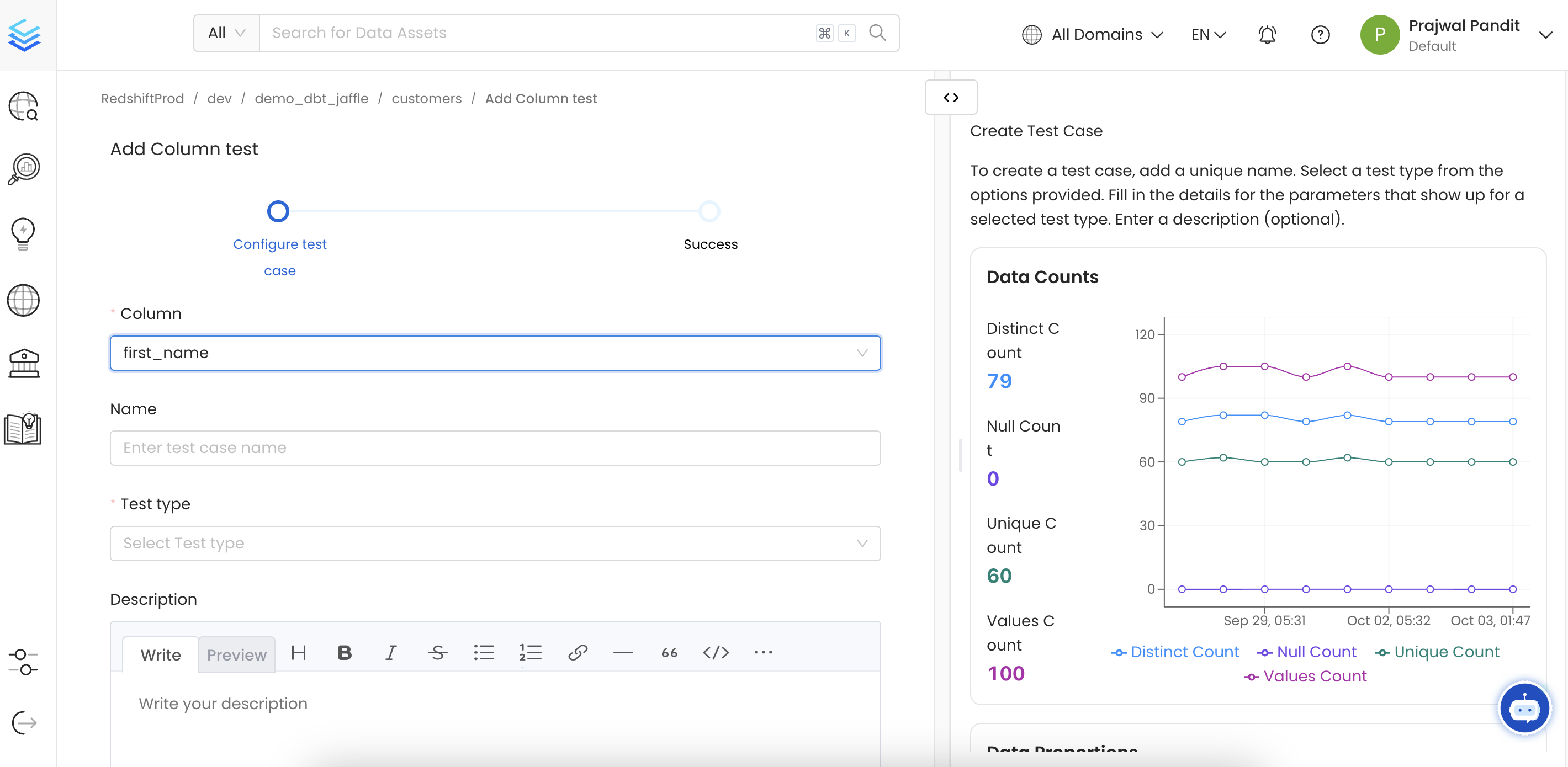

To create a Column Level Test, enter the following details:

- Column: Select a column. On the right-hand side, you can view some context about that column.

- Name: Add a name that best defines your test case.

- Test Type: Based on the test type, you will have further fields to define your test.

- Description: Describe the test case. Click on Submit to set up a test.

OpenMetadata currently supports the following column level test types:

- Column Value Lengths to be Between: Define the Min. and Max.

- Column Value Max. to be Between: Define the Min. and Max.

- Column Value Mean to be Between: Define the Min. and Max.

- Column Value Median to be Between: Define the Min. and Max.

- Column Value Min. to be Between: Define the Min. and Max.

- Column Values Missing Count: Define the number of missing values. You can also choose to match all null and empty values as missing, and configure additional missing strings such as N/A.

- Column Values Sum to be Between: Define the Min. and Max.

- Column Value Std Dev to be Between: Define the Min. and Max.

- Column Values to be Between: Define the Min. and Max.

- Column Values to be in Set: You can add an array of allowed values.

- Column Values to be Not in Set: You can add an array of forbidden values.

- Column Values to be Not Null

- Column Values to be Unique

- Column Values to Match Regex Pattern: Define the regular expression that the column entries should match.

- Column Values to Not Match Regex: Define the regular expression that the column entries should not match.
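The value-based column checks above can be sketched in Python over an in-memory list of column values rather than a database. This sketch assumes that nulls (`None`) are skipped by the value checks, that Min. and Max. bounds are inclusive, and that a regex must match the whole value (`re.fullmatch`); your database engine's regex semantics may differ. The missing-count parameters `treat_empty_as_missing` and `extra_missing` are hypothetical names for the options described above, and all function names are illustrative.

```python
import re
from typing import Any, Iterable, List, Optional


def _present(values: Iterable[Any]) -> List[Any]:
    """Drop nulls; this sketch assumes value checks ignore nulls, as SQL aggregates do."""
    return [v for v in values if v is not None]


def _within(x: float, lo: Optional[float], hi: Optional[float]) -> bool:
    return (lo is None or x >= lo) and (hi is None or x <= hi)


def values_between(values: Iterable[Any], lo: Optional[float] = None, hi: Optional[float] = None) -> bool:
    return all(_within(v, lo, hi) for v in _present(values))


def value_lengths_between(values: Iterable[Any], lo: Optional[int] = None, hi: Optional[int] = None) -> bool:
    return all(_within(len(v), lo, hi) for v in _present(values))


def values_in_set(values: Iterable[Any], allowed: Iterable[Any]) -> bool:
    allowed_set = set(allowed)
    return all(v in allowed_set for v in _present(values))


def values_not_in_set(values: Iterable[Any], forbidden: Iterable[Any]) -> bool:
    forbidden_set = set(forbidden)
    return not any(v in forbidden_set for v in _present(values))


def values_not_null(values: Iterable[Any]) -> bool:
    return all(v is not None for v in values)


def values_unique(values: Iterable[Any]) -> bool:
    present = _present(values)
    return len(present) == len(set(present))


def values_match_regex(values: Iterable[Any], pattern: str) -> bool:
    compiled = re.compile(pattern)
    return all(compiled.fullmatch(str(v)) for v in _present(values))


def values_not_match_regex(values: Iterable[Any], pattern: str) -> bool:
    compiled = re.compile(pattern)
    return not any(compiled.fullmatch(str(v)) for v in _present(values))


def missing_count(values: Iterable[Any], treat_empty_as_missing: bool = False,
                  extra_missing: Iterable[str] = ()) -> int:
    """Count nulls, plus empty strings and extra markers such as 'N/A' when configured."""
    markers = set(extra_missing)
    if treat_empty_as_missing:
        markers.add("")
    return sum(1 for v in values if v is None or v in markers)


# Usage on a hypothetical email column.
emails = ["a@example.com", "b@example.com", None, "N/A", ""]
print(values_not_null(emails))                                                     # False
print(missing_count(emails, treat_empty_as_missing=True, extra_missing=["N/A"]))   # 3
print(values_match_regex(["a@example.com", "b@example.com"], r"[^@\s]+@[^@\s]+"))  # True
print(values_unique(["x", "y", "x"]))                                              # False
```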

Configure a Column Level Test
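The Max., Mean, Median, Min., Sum, and Std Dev tests each bound a single aggregate of the column. The Python sketch below computes those aggregates over the non-null values with the standard `statistics` module. It assumes inclusive bounds and uses the population standard deviation (`statistics.pstdev`); whether OpenMetadata uses the population or the sample standard deviation is an assumption to verify for your setup. The aggregate keys and function name are hypothetical.

```python
import statistics
from typing import Callable, Dict, Iterable, List, Optional

# Hypothetical labels mapping each test to the aggregate it bounds.
AGGREGATES: Dict[str, Callable[[List[float]], float]] = {
    "max": max,
    "mean": statistics.mean,
    "median": statistics.median,
    "min": min,
    "sum": sum,
    "stddev": statistics.pstdev,  # population standard deviation (assumption)
}


def aggregate_between(values: Iterable[Optional[float]], aggregate: str,
                      lo: Optional[float] = None, hi: Optional[float] = None) -> bool:
    """Pass if the chosen aggregate of the non-null values lies within [lo, hi]."""
    present = [v for v in values if v is not None]
    if not present:
        raise ValueError("no non-null values to aggregate")
    result = AGGREGATES[aggregate](present)
    return (lo is None or result >= lo) and (hi is None or result <= hi)


# Usage: mean is 12.0, max is 14.0, population std dev is about 1.63.
amounts = [10.0, 12.0, None, 14.0]
print(aggregate_between(amounts, "mean", 11, 13))   # True
print(aggregate_between(amounts, "max", hi=13))     # False
print(aggregate_between(amounts, "stddev", 0, 2))   # True
```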

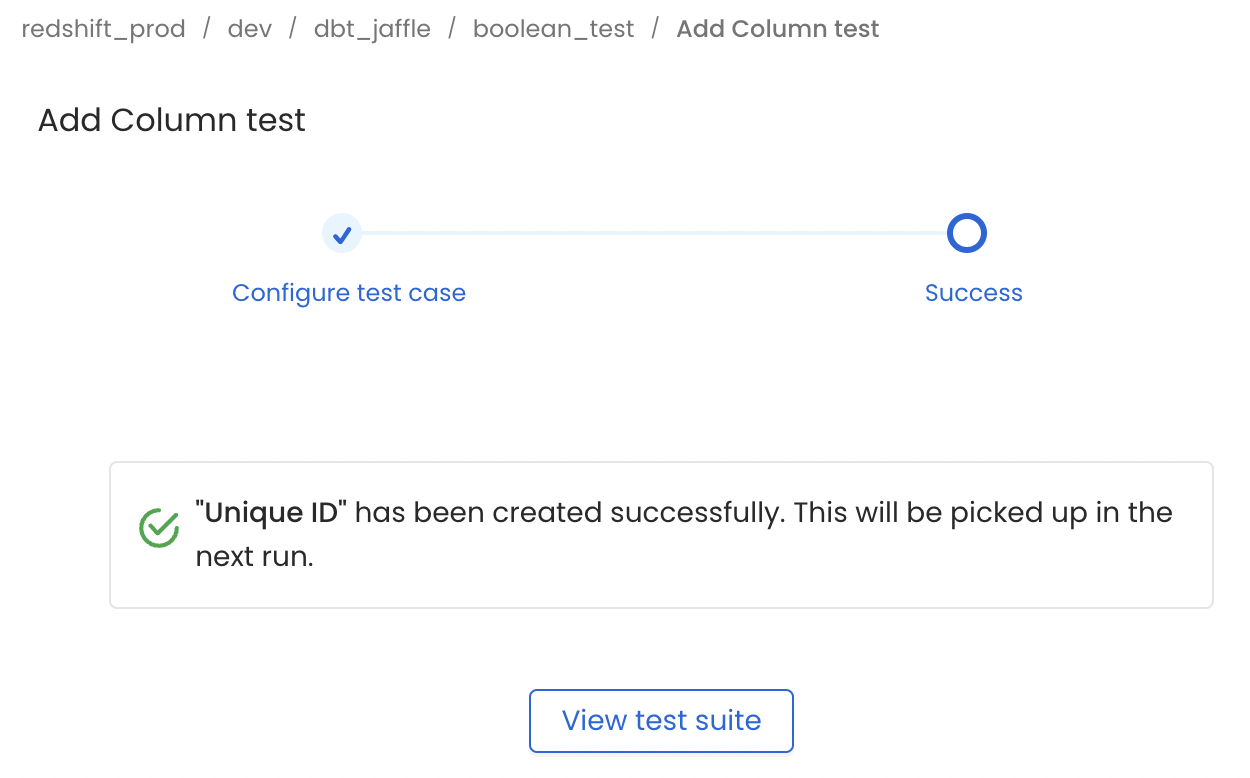

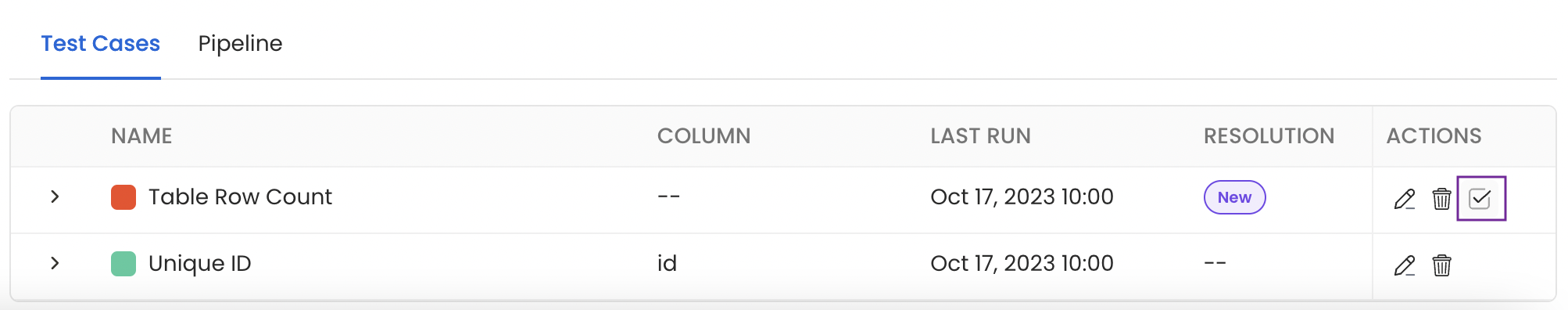

Once the test has been created, you can view the test suite. The test case is displayed in the Data Quality tab, where you can also edit its Display Name and Description.

Column Level Test Created

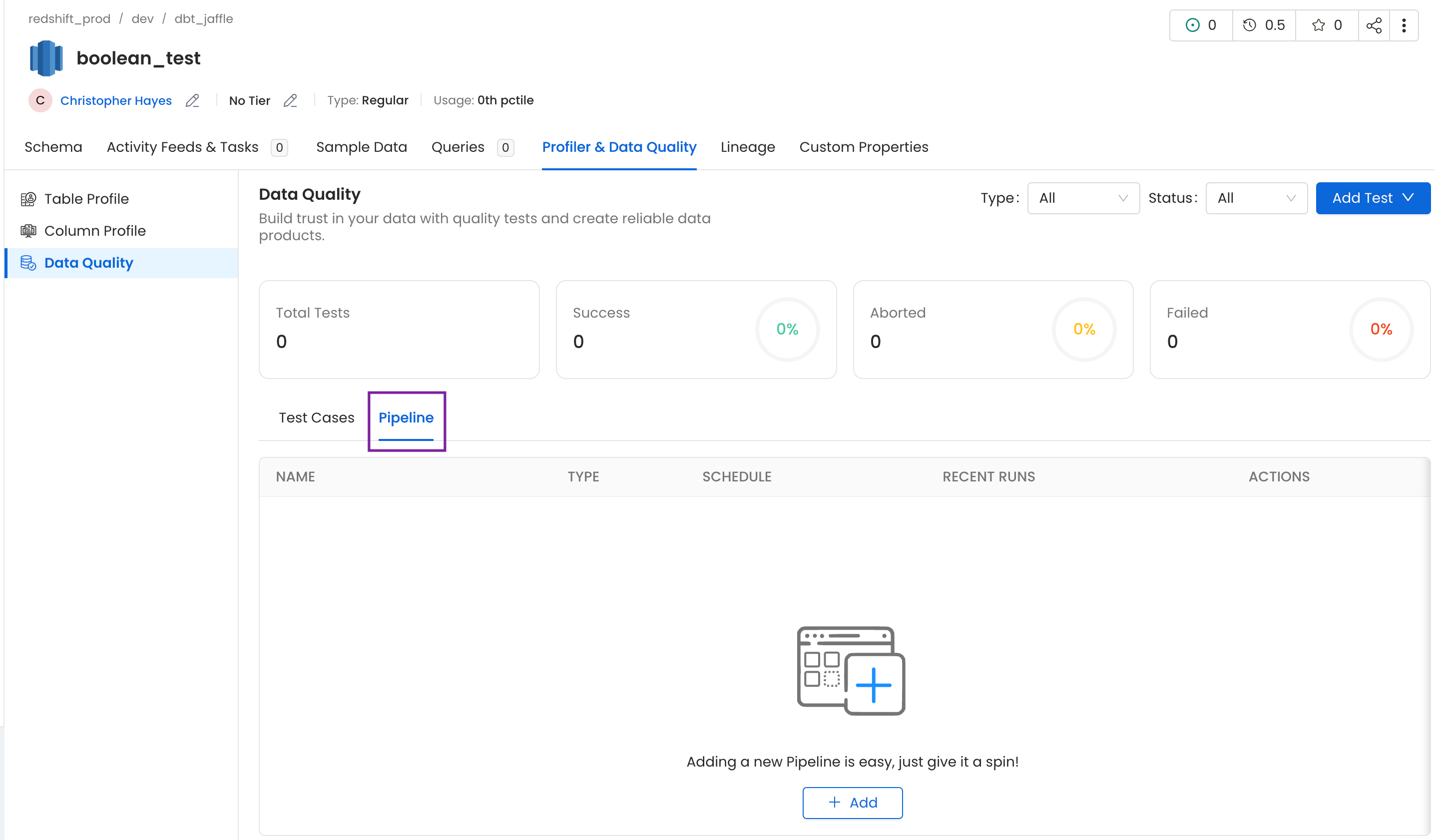

A pipeline can be set up for the tests to run at a regular cadence.

- Click on the Pipeline tab.

- Add a pipeline.

Set up a Pipeline

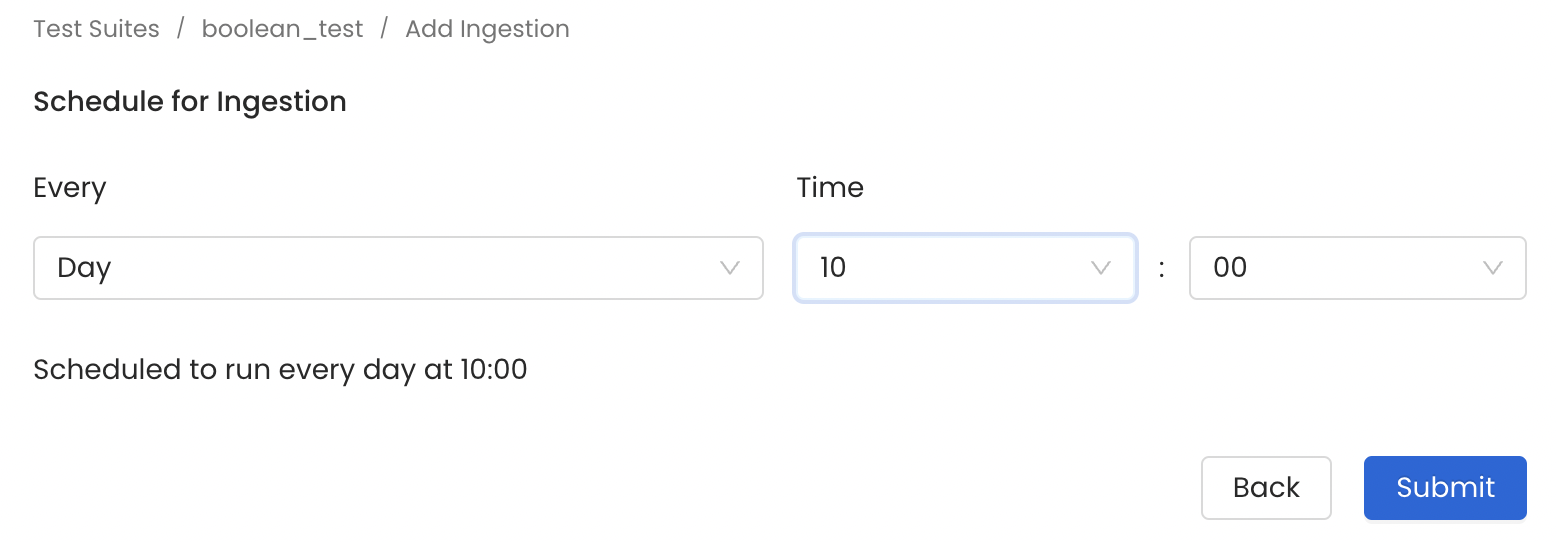

- Set up the scheduler for the desired frequency. Scheduled times are in UTC.

- Click on Submit.
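Because the scheduler uses UTC, you may need to convert the local time at which you want the tests to run. The following Python sketch does that conversion for a fixed UTC offset using only the standard library. It does not model daylight-saving changes, and the function name is hypothetical. The second return value indicates whether the UTC run time falls on the previous (-1), same (0), or next (+1) calendar day.

```python
from datetime import date, datetime, time, timedelta, timezone
from typing import Tuple


def local_time_to_utc(hour: int, minute: int, utc_offset_hours: float) -> Tuple[time, int]:
    """Return the UTC time of day for a local wall-clock time, plus the day shift.

    utc_offset_hours is a fixed offset such as -5 or 5.5; daylight saving is not modeled.
    """
    local_tz = timezone(timedelta(hours=utc_offset_hours))
    # Any reference date works for a fixed offset.
    local_dt = datetime.combine(date(2024, 1, 1), time(hour, minute), tzinfo=local_tz)
    utc_dt = local_dt.astimezone(timezone.utc)
    return utc_dt.time(), (utc_dt.date() - local_dt.date()).days


# 06:00 at UTC-5 runs at 11:00 UTC on the same day.
print(local_time_to_utc(6, 0, -5))    # (datetime.time(11, 0), 0)
# 02:30 at UTC+5:30 runs at 21:00 UTC on the previous day.
print(local_time_to_utc(2, 30, 5.5))  # (datetime.time(21, 0), -1)
```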

Schedule the Pipeline

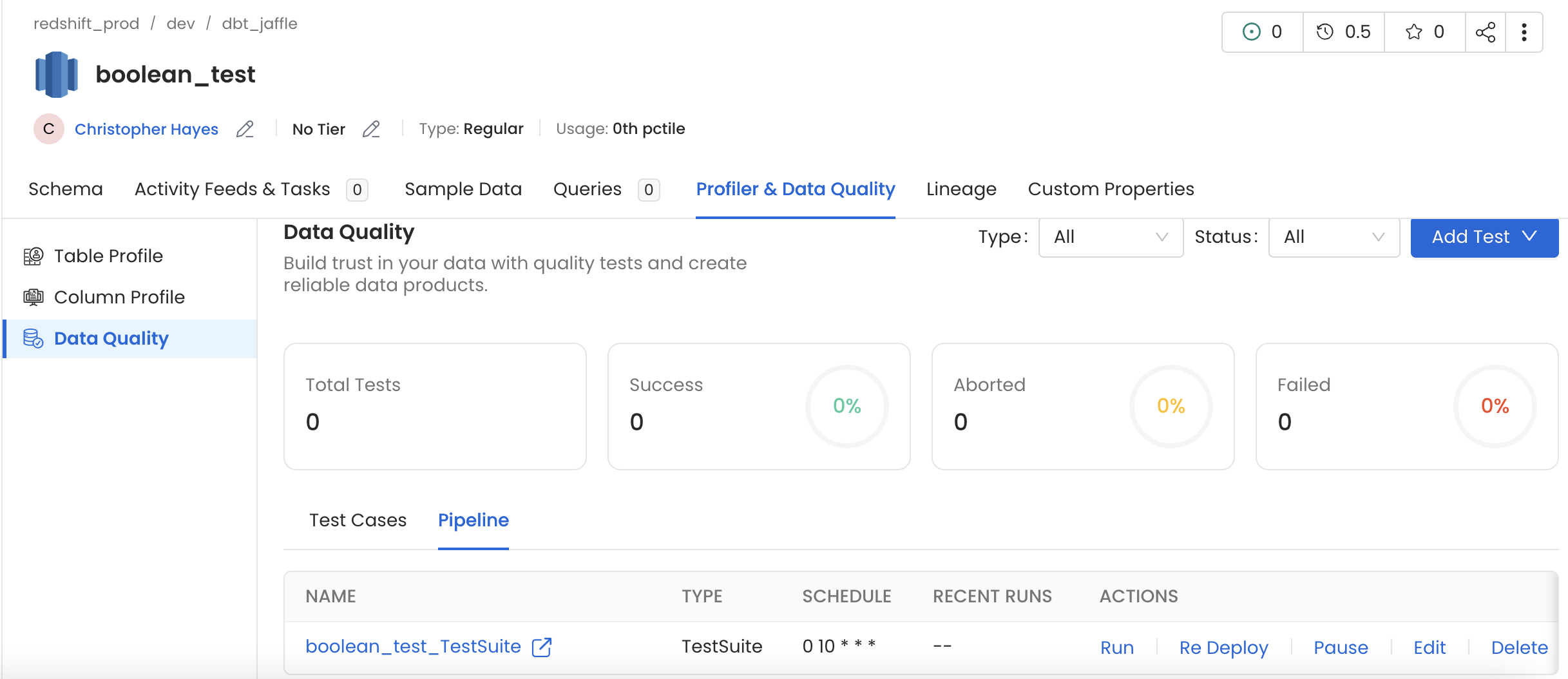

The pipeline has been set up and will run at the scheduled time.

Pipeline Scheduled

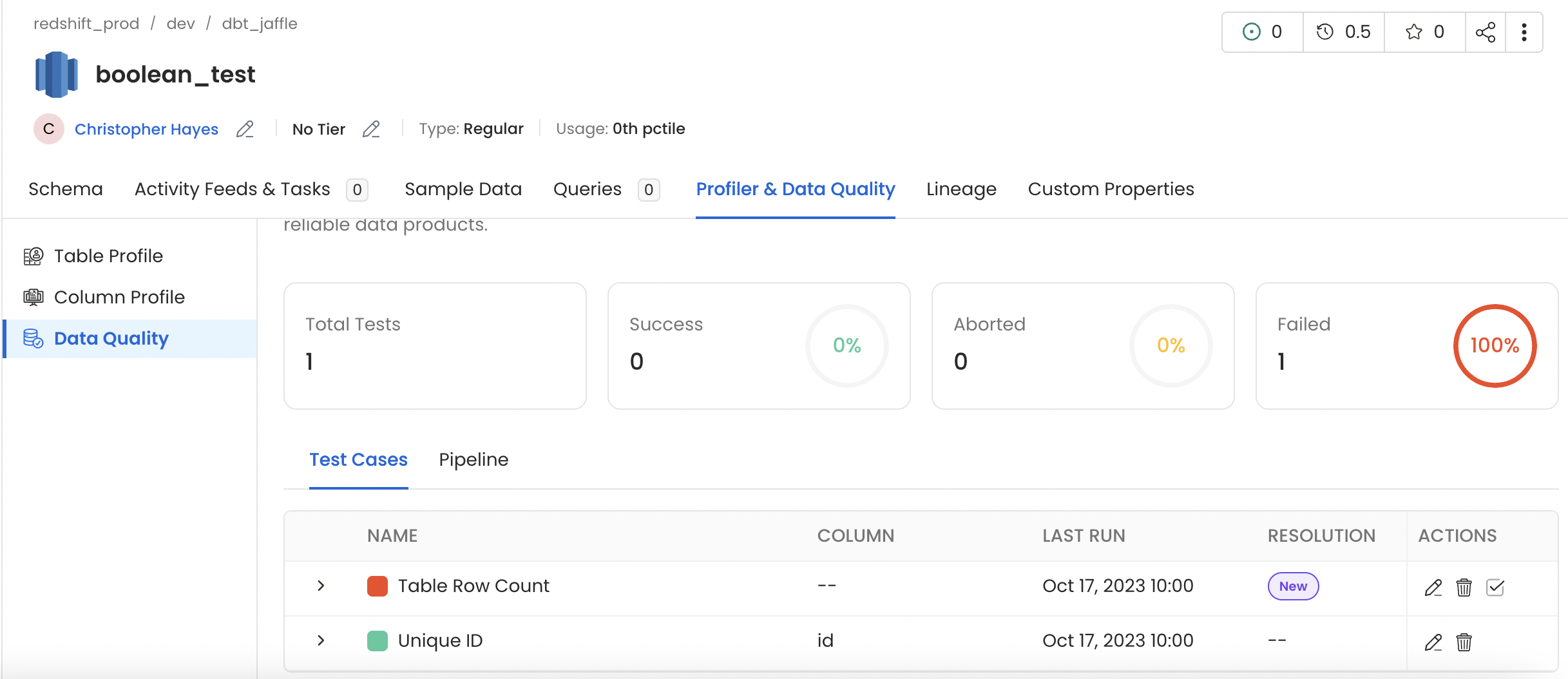

The tests will be run and the results will be updated in the Data Quality tab.

Data Quality Tests

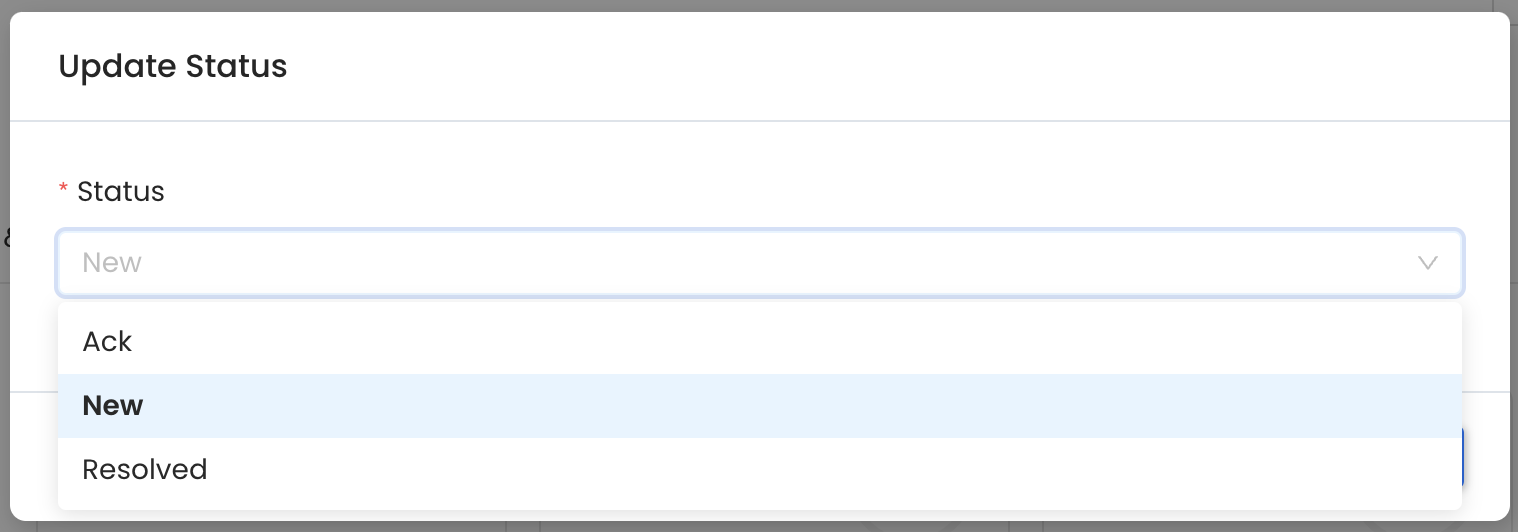

If a test fails, you can change its status to New, Acknowledged, or Resolved by clicking on the Status icon.

Failed Test: Edit Status

- Select the Test Status

Edit Test Status

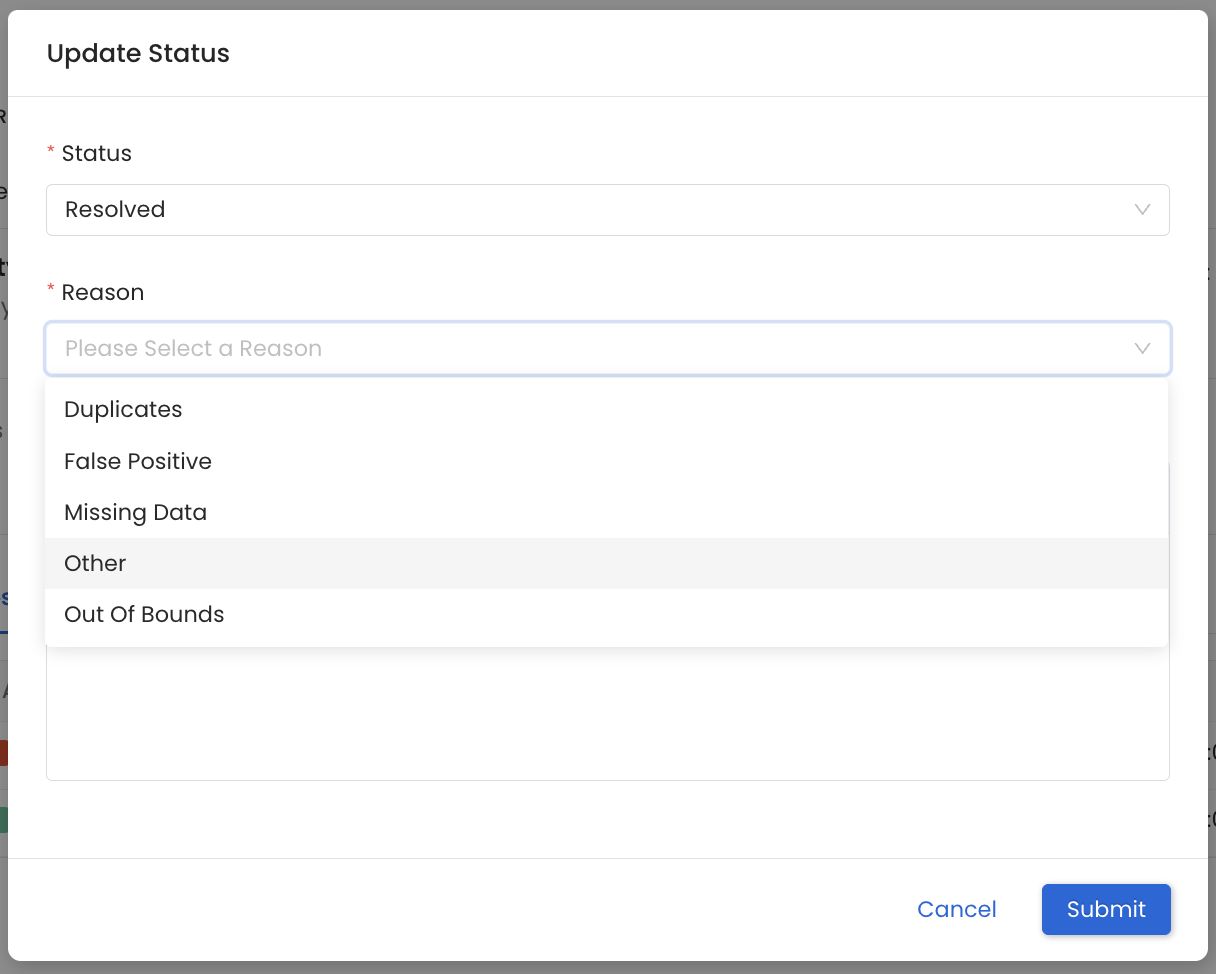

- If you are marking the test status as Resolved, you must specify the Reason for the failure and add a Comment. The reasons for failure can be Duplicates, False Positive, Missing Data, Other, or Out of Bounds.

- Click on Submit.
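The rule that a Resolved status requires a Reason and a Comment can be modeled as a small validation step. This Python sketch uses hypothetical class names and is not OpenMetadata's data model; only the status values and failure reasons are taken from the steps above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class FailureStatus(Enum):
    """Statuses a failed test can be set to (hypothetical class name)."""
    NEW = "New"
    ACKNOWLEDGED = "Acknowledged"
    RESOLVED = "Resolved"


class FailureReason(Enum):
    """Reasons offered when resolving a failure."""
    DUPLICATES = "Duplicates"
    FALSE_POSITIVE = "False Positive"
    MISSING_DATA = "Missing Data"
    OTHER = "Other"
    OUT_OF_BOUNDS = "Out of Bounds"


@dataclass
class StatusUpdate:
    status: FailureStatus
    reason: Optional[FailureReason] = None
    comment: str = ""

    def validate(self) -> None:
        """Raise ValueError if a Resolved update lacks a reason or a comment."""
        if self.status is FailureStatus.RESOLVED:
            if self.reason is None:
                raise ValueError("a Resolved status requires a failure reason")
            if not self.comment.strip():
                raise ValueError("a Resolved status requires a comment")


# Usage: New and Acknowledged need no extra details; Resolved does.
StatusUpdate(FailureStatus.ACKNOWLEDGED).validate()
StatusUpdate(FailureStatus.RESOLVED, FailureReason.DUPLICATES, "Deduplicated upstream load").validate()
try:
    StatusUpdate(FailureStatus.RESOLVED, comment="Fixed").validate()
except ValueError as err:
    print(err)  # a Resolved status requires a failure reason
```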

Resolved Status: Reason

Users can also set up alerts to be notified when a test fails.

How to Set Alerts for Test Case Fails: Get notified when a data quality test fails.