Spark Lineage Ingestion

A Spark job may move or transfer data, which results in data lineage. To capture this lineage, you can use the OpenMetadata Spark Agent: configure it with your Spark session, and it sends the Spark lineage to your OpenMetadata instance.

This guide explains how to use the OpenMetadata Spark Agent to capture such lineage.

Requirement

To use the OpenMetadata Spark Agent, you will have to download the latest jar from here.

We support Spark version 3.1 and above.
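If you want a job to fail fast on an unsupported runtime, a minimal sketch like the following compares a Spark version string against the 3.1 minimum. The helper name is_supported_spark_version is hypothetical; in PySpark you could pass it spark.version from an existing session or pyspark.__version__.

```python
import re

# Minimum Spark version supported by the OpenMetadata Spark Agent, per this guide.
MIN_SPARK_VERSION = (3, 1)


def is_supported_spark_version(version: str) -> bool:
    """Return True if a version string such as "3.3.2" is 3.1 or newer.

    Hypothetical helper: only the leading major.minor numbers are compared,
    so suffixes like "3.5.0-SNAPSHOT" are tolerated.
    """
    match = re.match(r"^(\d+)\.(\d+)", version.strip())
    if match is None:
        raise ValueError(f"Unrecognized Spark version: {version!r}")
    major, minor = int(match.group(1)), int(match.group(2))
    # Tuple comparison checks the major version first, then the minor version.
    return (major, minor) >= MIN_SPARK_VERSION


if __name__ == "__main__":
    for candidate in ["3.0.3", "3.1.0", "3.5.1"]:
        print(candidate, is_supported_spark_version(candidate))
```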

Configuration

This guide uses PySpark to demonstrate how to configure a Spark session for the OpenMetadata Spark Agent.

Once you have downloaded the jar, add the path to openmetadata-spark-agent.jar to your Spark configuration, along with any other jars your Spark job needs. In this example, that is mysql-connector-java.jar.

openmetadata-spark-agent.jar comes with a custom Spark listener, io.openlineage.spark.agent.OpenLineageSparkListener. Add it to the spark.extraListeners Spark configuration.
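The jar and listener settings described above can be built as a plain dictionary. This is a minimal sketch: base_spark_conf is a hypothetical helper, the jar paths you pass in are placeholders, and it relies on the standard Spark behavior that spark.jars takes a comma-separated list of jar paths.

```python
import os
from typing import Dict, Iterable

# Listener class shipped inside openmetadata-spark-agent.jar, as named in this guide.
OPENMETADATA_LISTENER = "io.openlineage.spark.agent.OpenLineageSparkListener"


def base_spark_conf(jar_paths: Iterable[str]) -> Dict[str, str]:
    """Return spark.jars and spark.extraListeners settings for the agent.

    Raises ValueError if no openmetadata-spark-agent jar is among the paths,
    since the listener class would then be missing from the classpath.
    """
    jars = [path.strip() for path in jar_paths if path.strip()]
    if not any(os.path.basename(path).startswith("openmetadata-spark-agent") for path in jars):
        raise ValueError("openmetadata-spark-agent.jar must be listed in spark.jars")
    return {
        # Spark expects a single comma-separated string of jar paths.
        "spark.jars": ",".join(jars),
        "spark.extraListeners": OPENMETADATA_LISTENER,
    }
```

Each key and value can then be passed to SparkSession.builder.config when building the session.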

spark.openmetadata.transport.hostPort: The host and port of your OpenMetadata instance.

spark.openmetadata.transport.type: Required. Set its value to openmetadata.

spark.openmetadata.transport.jwtToken: Your OpenMetadata JWT token. Check out this documentation on how to generate a JWT token in OpenMetadata.

spark.openmetadata.transport.pipelineServiceName: The Spark job creates a new pipeline service of type Spark; use this configuration to customize the pipeline service name.

Note: If a pipeline service with the specified name already exists, the job uses and updates that existing pipeline service.

spark.openmetadata.transport.pipelineName: The Spark job also creates a new pipeline within the pipeline service defined above. Use this configuration to customize the pipeline name.

Note: If a pipeline with the specified name already exists, the job uses and updates that existing pipeline.

spark.openmetadata.transport.pipelineSourceUrl: A URL related to the pipeline, which gives it additional context.

spark.openmetadata.transport.pipelineDescription: The pipeline description.

spark.openmetadata.transport.databaseServiceNames: A comma-separated list of the database service names that contain the source tables used in this job. If you do not provide this configuration, the agent searches through all the services available in OpenMetadata.

spark.openmetadata.transport.timeout: The timeout for communicating with the OpenMetadata APIs.
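The transport options above can be collected in one place. A minimal sketch, assuming you want them as a plain dictionary: openmetadata_transport_conf and apply_conf are hypothetical helpers, treating hostPort and jwtToken as mandatory is an assumption, and apply_conf simply chains .config(key, value), which is how SparkSession.builder accepts settings.

```python
from typing import Dict, Iterable, Optional

PREFIX = "spark.openmetadata.transport."


def openmetadata_transport_conf(
    host_port: str,
    jwt_token: str,
    *,
    pipeline_service_name: Optional[str] = None,
    pipeline_name: Optional[str] = None,
    pipeline_source_url: Optional[str] = None,
    pipeline_description: Optional[str] = None,
    database_service_names: Optional[Iterable[str]] = None,
    timeout: Optional[int] = None,
) -> Dict[str, str]:
    """Map the options described above onto spark.openmetadata.transport.* keys.

    Options left as None are not set at all.
    """
    if not host_port or not jwt_token:
        raise ValueError("host_port and jwt_token are treated as mandatory here")
    names = list(database_service_names or [])
    values = {
        "hostPort": host_port,
        "type": "openmetadata",  # required, fixed value per this guide
        "jwtToken": jwt_token,
        "pipelineServiceName": pipeline_service_name,
        "pipelineName": pipeline_name,
        "pipelineSourceUrl": pipeline_source_url,
        "pipelineDescription": pipeline_description,
        # Comma-separated; leaving it unset means all services are searched.
        "databaseServiceNames": ",".join(names) if names else None,
        "timeout": None if timeout is None else str(timeout),
    }
    return {PREFIX + key: value for key, value in values.items() if value is not None}


def apply_conf(builder, conf: Dict[str, str]):
    """Chain builder.config(key, value) for every entry, e.g. on SparkSession.builder."""
    for key, value in conf.items():
        builder = builder.config(key, value)
    return builder
```

With PySpark installed, you could pass SparkSession.builder.appName(...) to apply_conf and call getOrCreate() on the result.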

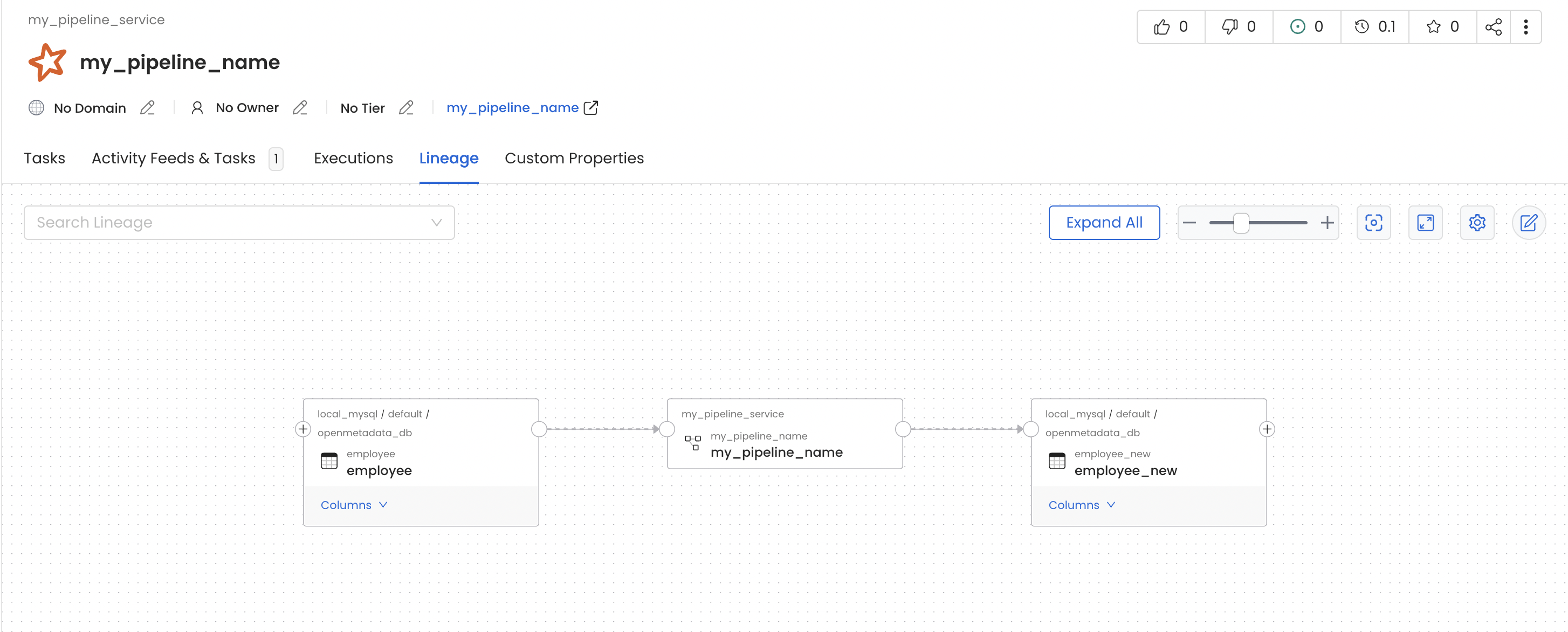

In this job, we read data from the employee table and write it to another table, employee_new, in the same MySQL source.
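The job described above reads employee and writes employee_new over JDBC. A minimal sketch, assuming MySQL Connector/J 8.x (driver class com.mysql.cj.jdbc.Driver) and placeholder connection values; jdbc_options and copy_table are hypothetical helpers, and overwrite mode is an assumption. The spark argument is a session already created with the agent configuration, so the listener can observe both the read and the write.

```python
from typing import Dict

MYSQL_DRIVER = "com.mysql.cj.jdbc.Driver"  # driver class in MySQL Connector/J 8.x


def jdbc_options(url: str, table: str, user: str, password: str) -> Dict[str, str]:
    """Options shared by the JDBC reader and writer; all values are placeholders."""
    return {
        "url": url,
        "driver": MYSQL_DRIVER,
        "dbtable": table,
        "user": user,
        "password": password,
    }


def copy_table(spark, url: str, source: str, target: str, user: str, password: str) -> None:
    """Read the source table over JDBC and overwrite the target table in the same database."""
    df = spark.read.format("jdbc").options(**jdbc_options(url, source, user, password)).load()
    (
        df.write.format("jdbc")
        .options(**jdbc_options(url, target, user, password))
        .mode("overwrite")  # assumption: replace employee_new on each run
        .save()
    )
```

For this example, the call would be copy_table(spark, "jdbc:mysql://<host>:3306/<database>", "employee", "employee_new", <user>, <password>).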

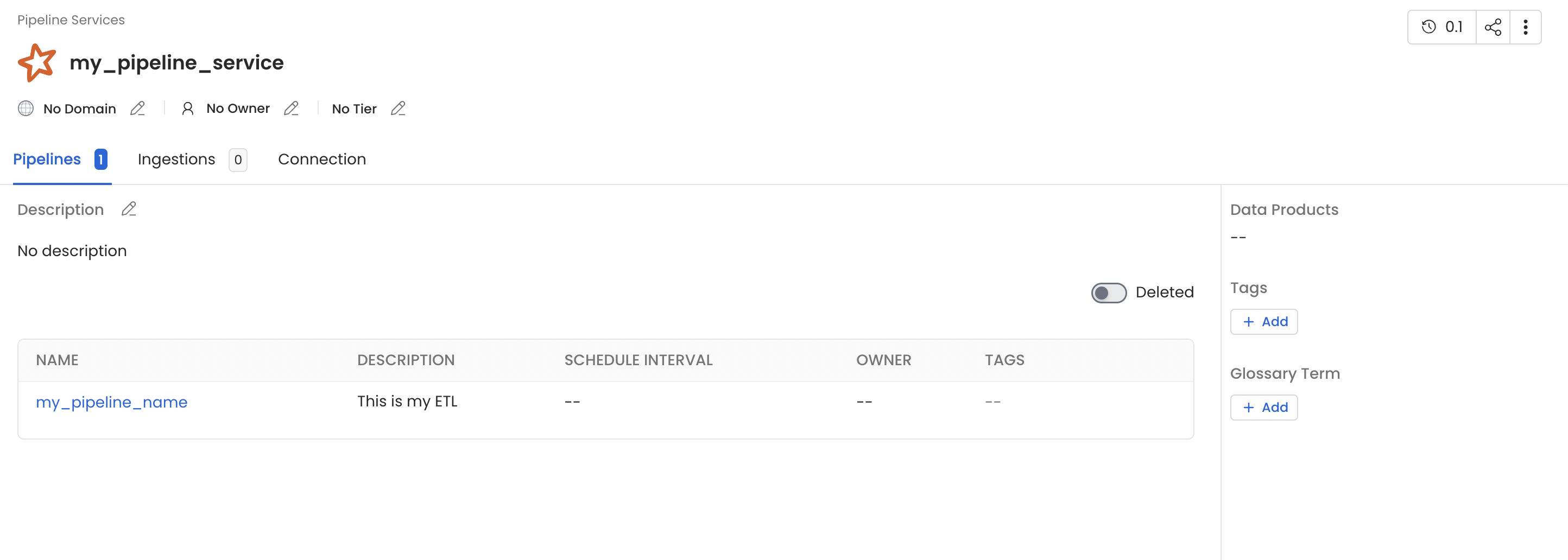

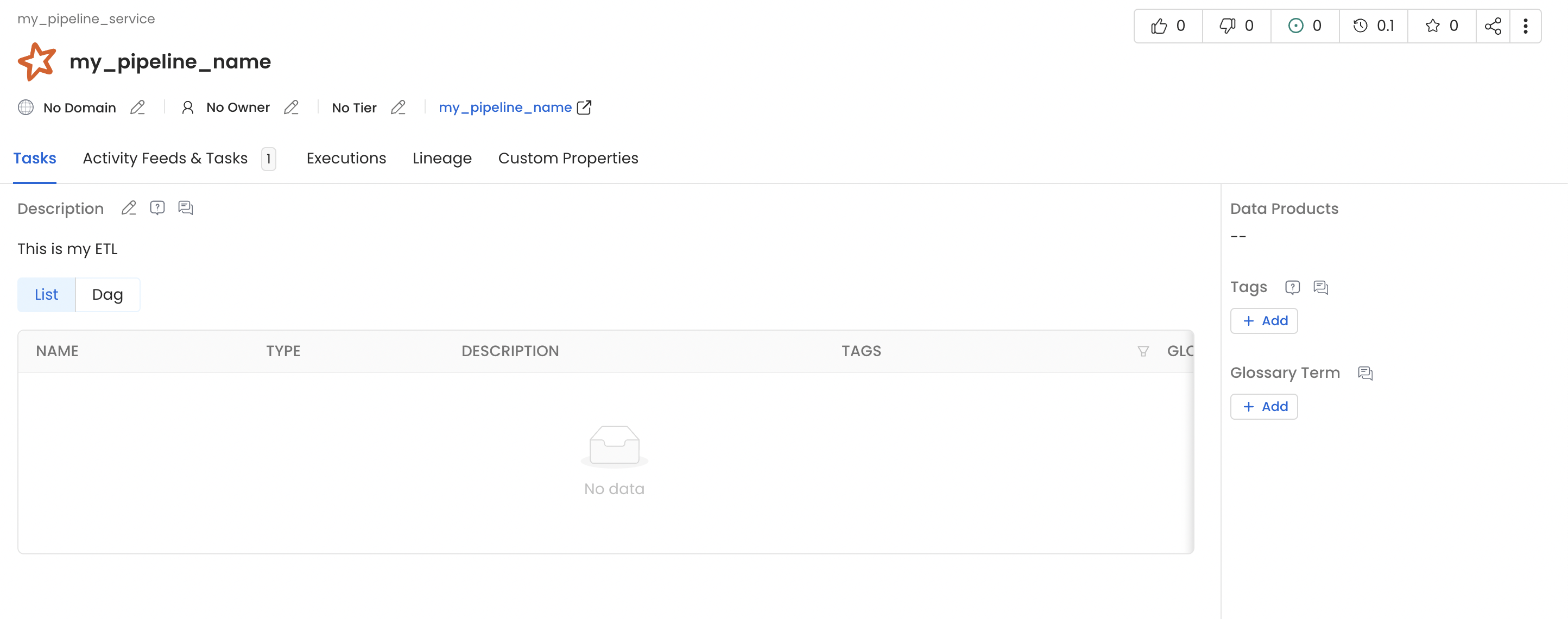

Once this PySpark job finishes, you will see a new pipeline service named my_pipeline_service in your OpenMetadata instance. As in the example above, it contains a pipeline named my_pipeline, and you should also see lineage between the employee and employee_new tables via my_pipeline.
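To check the result without the UI, a hedged sketch: it assumes the OpenMetadata REST endpoint GET /api/v1/pipelines/name/{fqn}, that the pipeline's fully qualified name is service.pipeline (my_pipeline_service.my_pipeline here), and that the JWT token used for spark.openmetadata.transport.jwtToken is accepted as a Bearer token. The helper names pipeline_by_name_url and fetch_pipeline are hypothetical.

```python
import json
import urllib.parse
import urllib.request


def pipeline_by_name_url(host_port: str, service: str, pipeline: str) -> str:
    """Build the (assumed) URL for fetching a pipeline by its fully qualified name."""
    fqn = urllib.parse.quote(f"{service}.{pipeline}", safe="")
    return f"{host_port.rstrip('/')}/api/v1/pipelines/name/{fqn}"


def fetch_pipeline(host_port: str, jwt_token: str, service: str, pipeline: str) -> dict:
    """GET the pipeline entity; urllib raises HTTPError (e.g. 404) if it does not exist."""
    request = urllib.request.Request(
        pipeline_by_name_url(host_port, service, pipeline),
        headers={"Authorization": f"Bearer {jwt_token}"},
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```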

Spark Pipeline Service

Spark Pipeline Details

Spark Pipeline Lineage

Using Spark Agent with Databricks

Follow the steps below to use the OpenMetadata Spark Agent with Databricks.

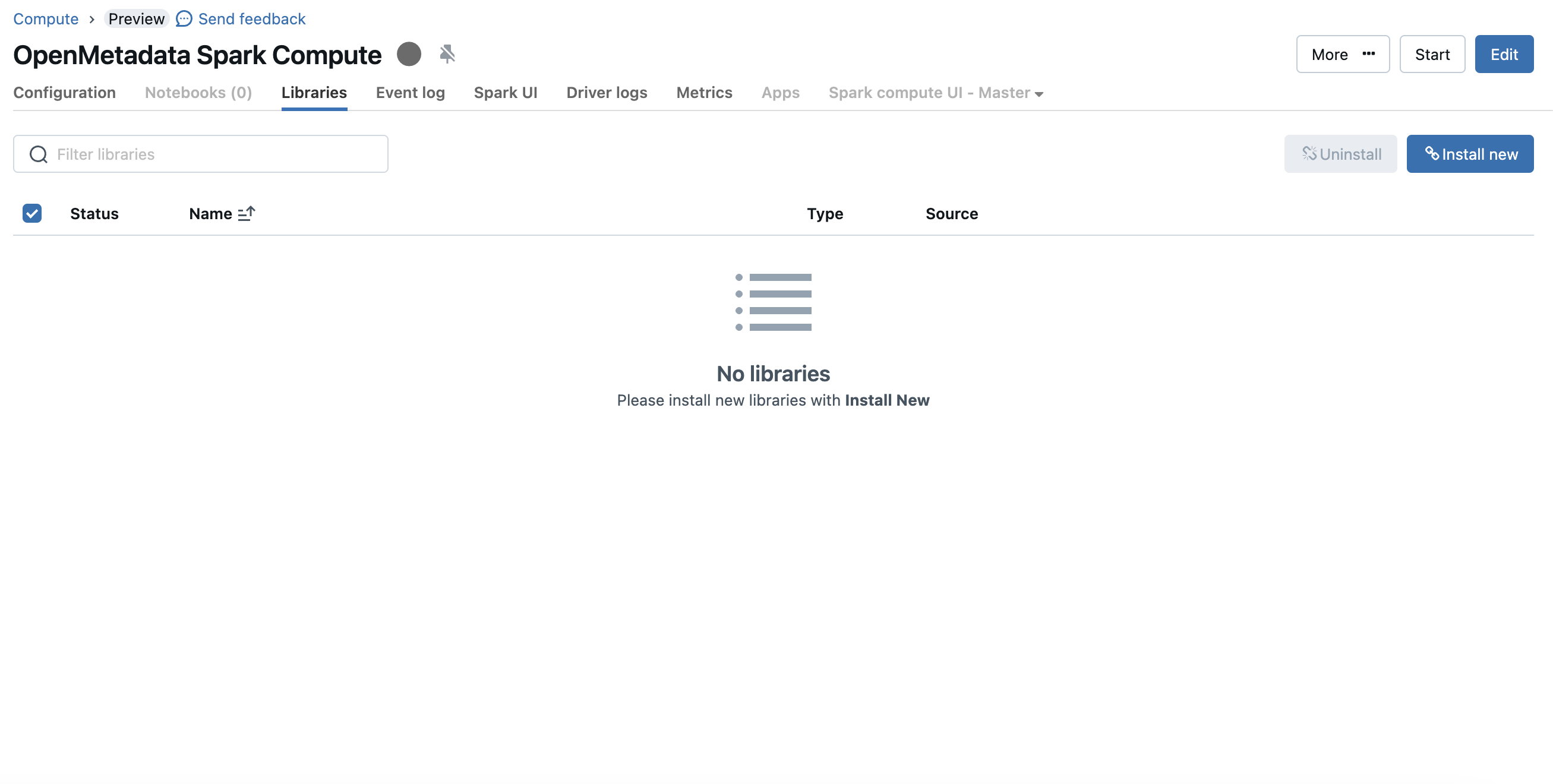

1. Upload the jar to the compute cluster

To use the OpenMetadata Spark Agent, download the latest jar from here and upload it to your Databricks compute cluster.

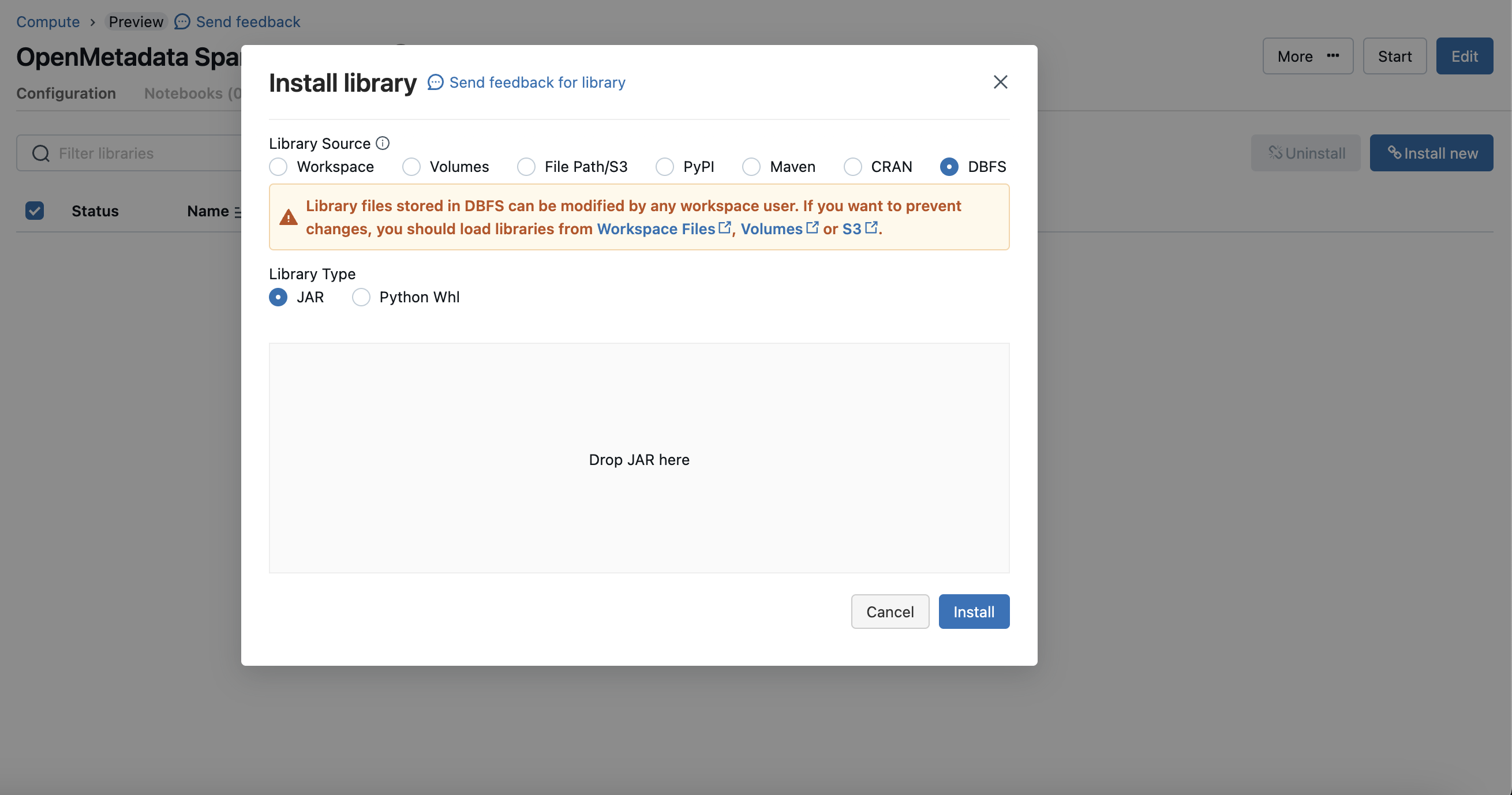

To upload the jar, open the compute details page and go to the Libraries tab.

Spark Upload Jar

Click the "Install new" button, choose DBFS mode, and upload the OpenMetadata Spark Agent jar.

Spark Upload Jar

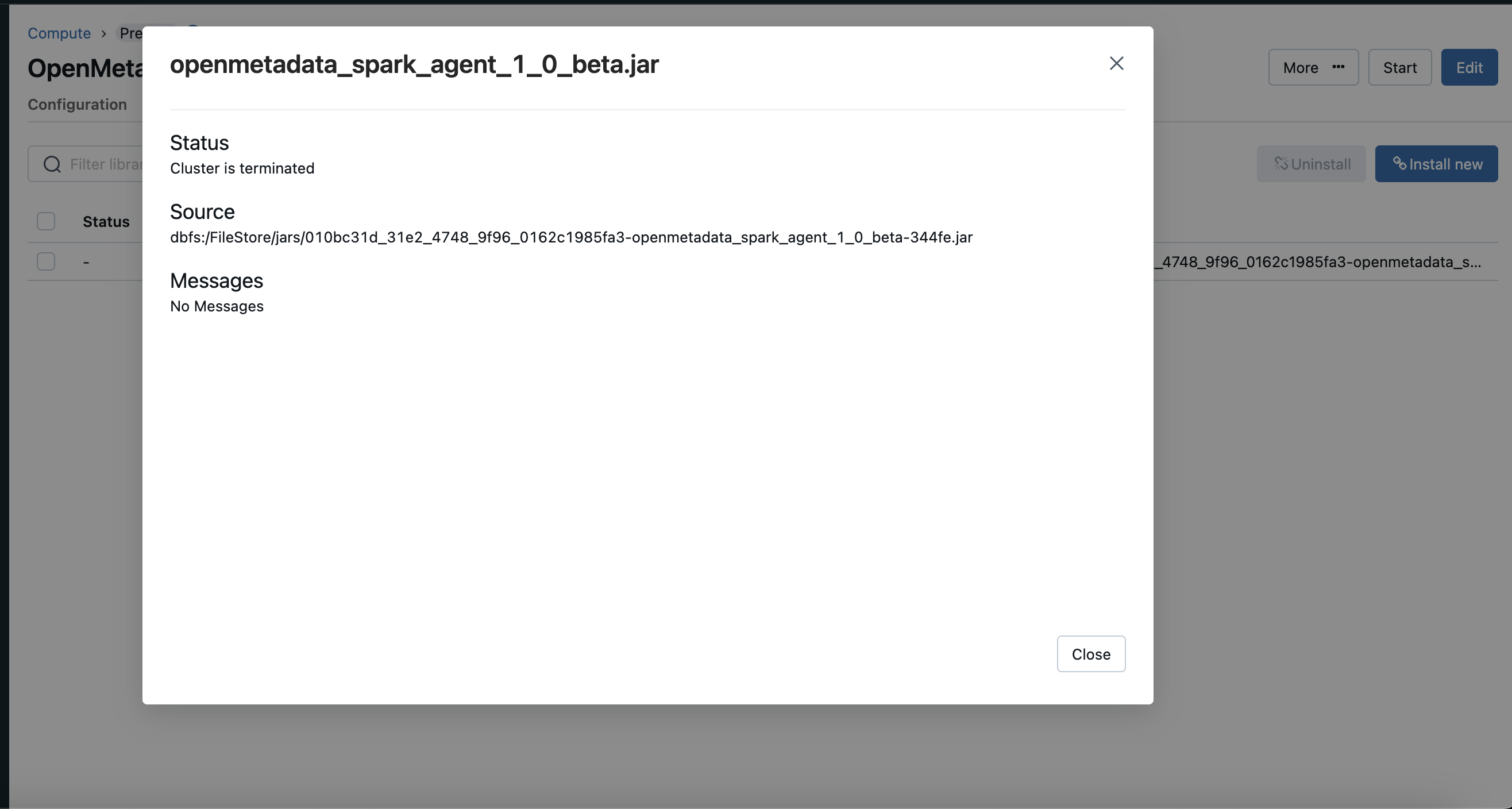

Once your jar is uploaded, copy its path for the next steps.

Spark Upload Jar

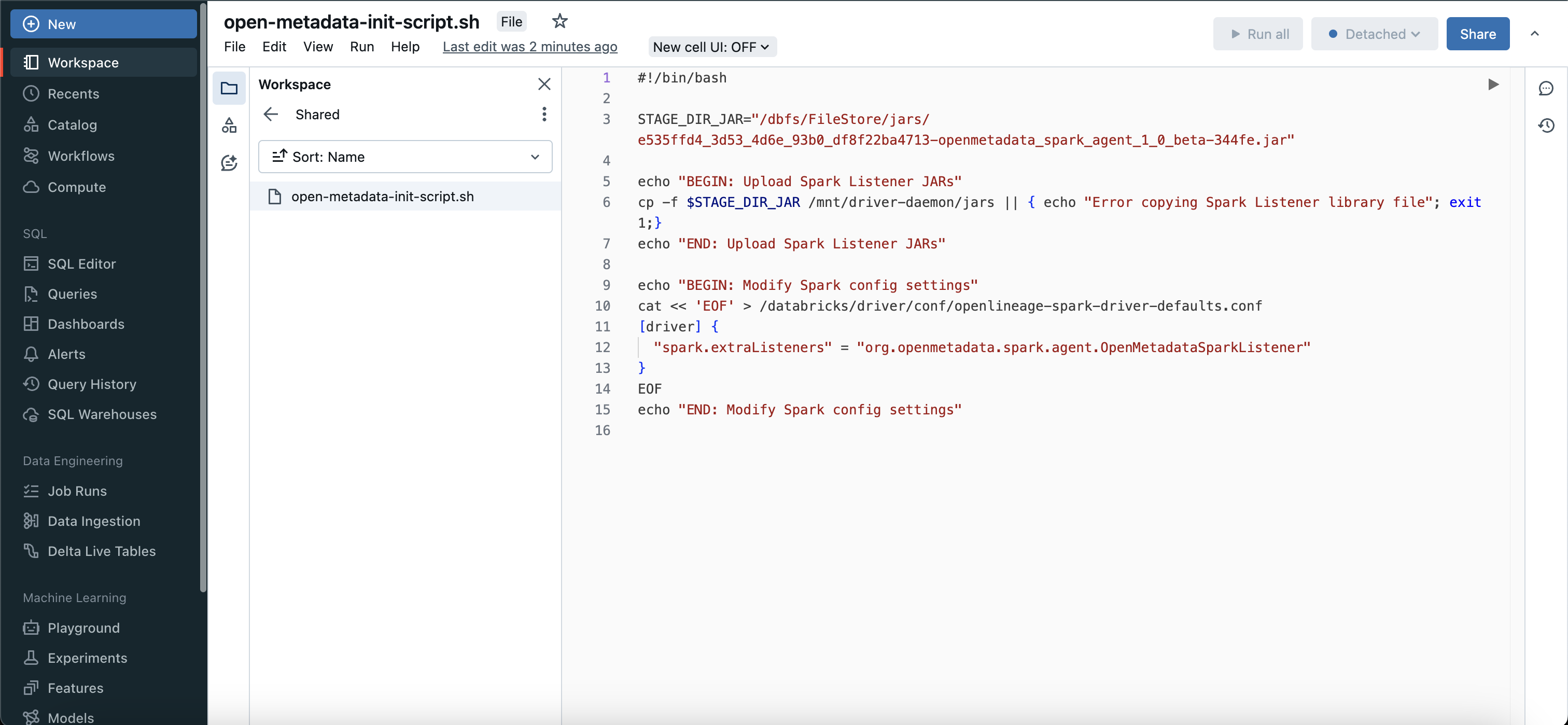

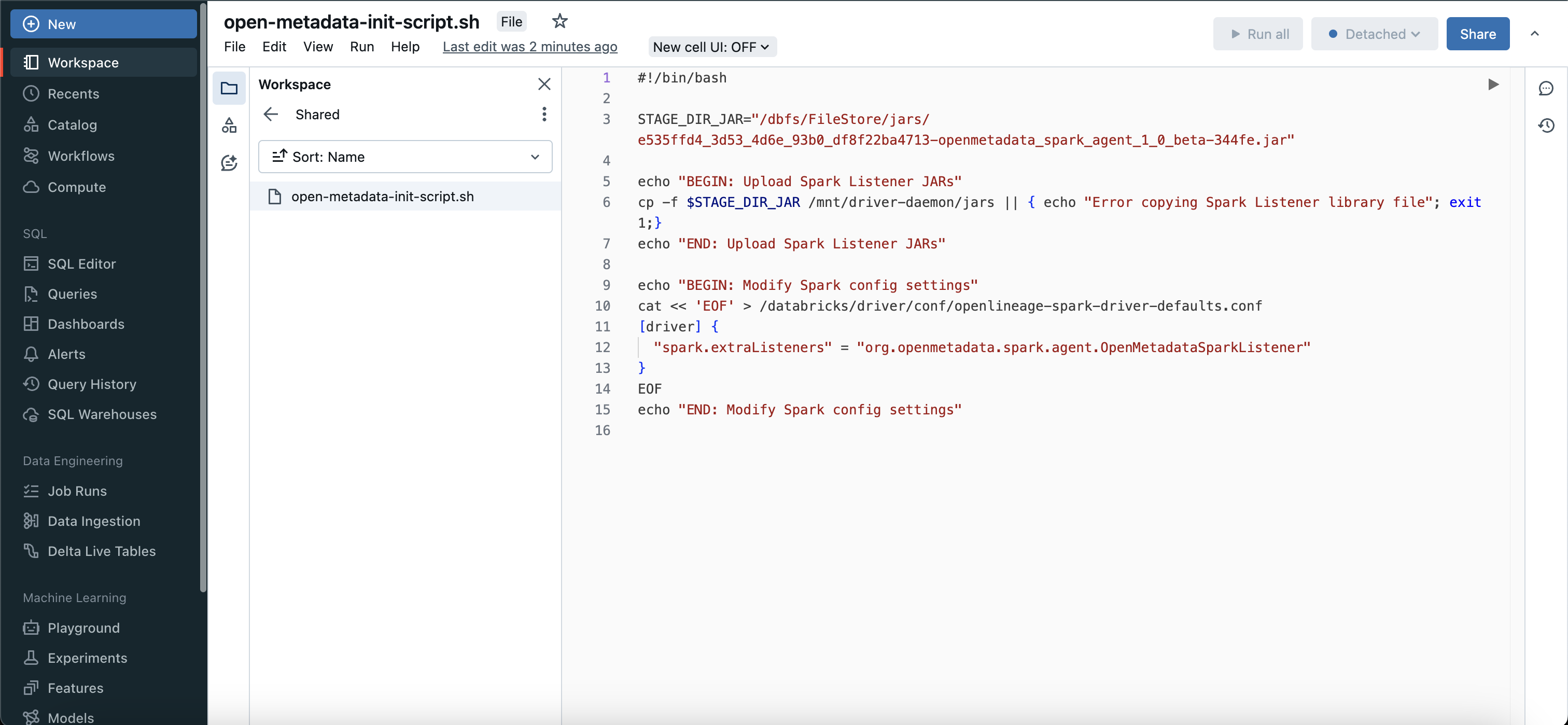

2. Create Initialization Script

Once your jar is uploaded, you need to create an initialization script in your workspace.

Note: The copied path looks like dbfs:/FileStore/jars/... and you need to modify it to look like /dbfs/FileStore/jars/... instead.
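The path change in the note above is a simple prefix rewrite: /dbfs/ is where DBFS is mounted as a local filesystem on the cluster, which is the form a shell script can read. A small sketch with a hypothetical helper name, dbfs_uri_to_fuse_path:

```python
def dbfs_uri_to_fuse_path(uri: str) -> str:
    """Turn a copied DBFS URI (dbfs:/FileStore/jars/...) into the /dbfs/... form."""
    prefix = "dbfs:/"
    if uri.startswith("/dbfs/"):
        return uri  # already in the local-path form
    if not uri.startswith(prefix):
        raise ValueError(f"Expected a path starting with {prefix!r}: {uri!r}")
    # Drop the "dbfs:/" scheme and re-root the remainder under /dbfs/.
    return "/dbfs/" + uri[len(prefix):].lstrip("/")
```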

Prepare Script

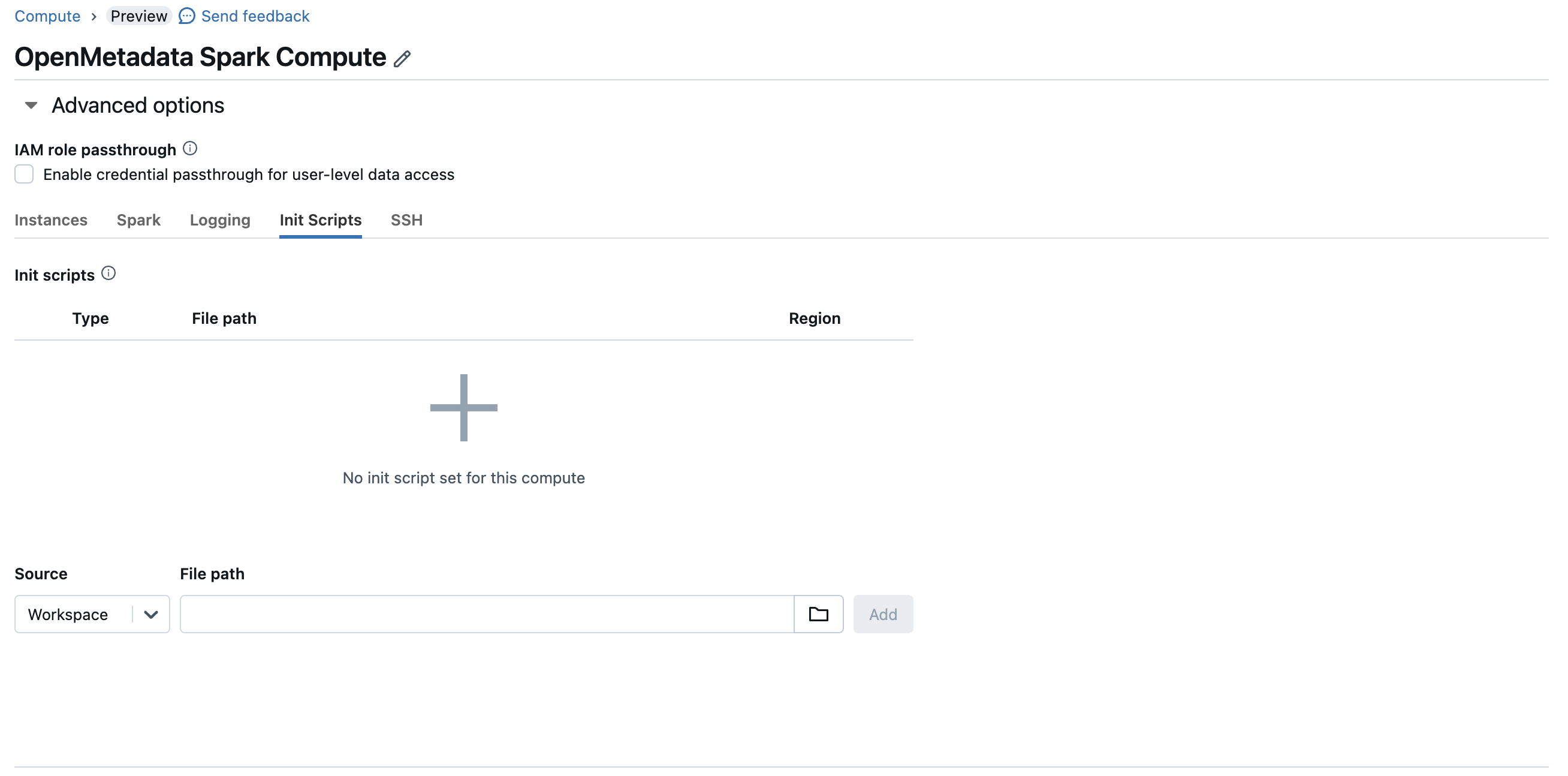
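As a rough sketch only, assuming the init script's job is to copy the agent jar from its /dbfs/... path into the driver's jar directory, the following Python helper renders such a script. The /mnt/driver-daemon/jars destination is taken from OpenLineage's Databricks init script examples and is an assumption here; verify it for your Databricks runtime. render_init_script is a hypothetical name.

```python
import shlex

# Assumed destination directory for driver jars; verify for your runtime.
DRIVER_JARS_DIR = "/mnt/driver-daemon/jars"


def render_init_script(jar_path: str) -> str:
    """Return the text of a bash init script that copies the agent jar to the driver."""
    if not jar_path.startswith("/dbfs/"):
        raise ValueError("use the /dbfs/... form of the copied jar path")
    src = shlex.quote(jar_path)  # safe even if the path contains spaces
    return "\n".join([
        "#!/bin/bash",
        f"STAGE_DIR_JAR={src}",
        'echo "BEGIN: Upload Spark Listener JARs"',
        f'cp -f "$STAGE_DIR_JAR" {DRIVER_JARS_DIR} || {{ echo "Error copying Spark Listener library file"; exit 1; }}',
        'echo "END: Upload Spark Listener JARs"',
        "",
    ])
```

You could save the returned text as the init script file in your workspace.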

3. Configure Initialization Script

Once you have created an initialization script, attach it to your compute instance: go to advanced config > init scripts and add your script path.

Prepare Script

Spark Init Script

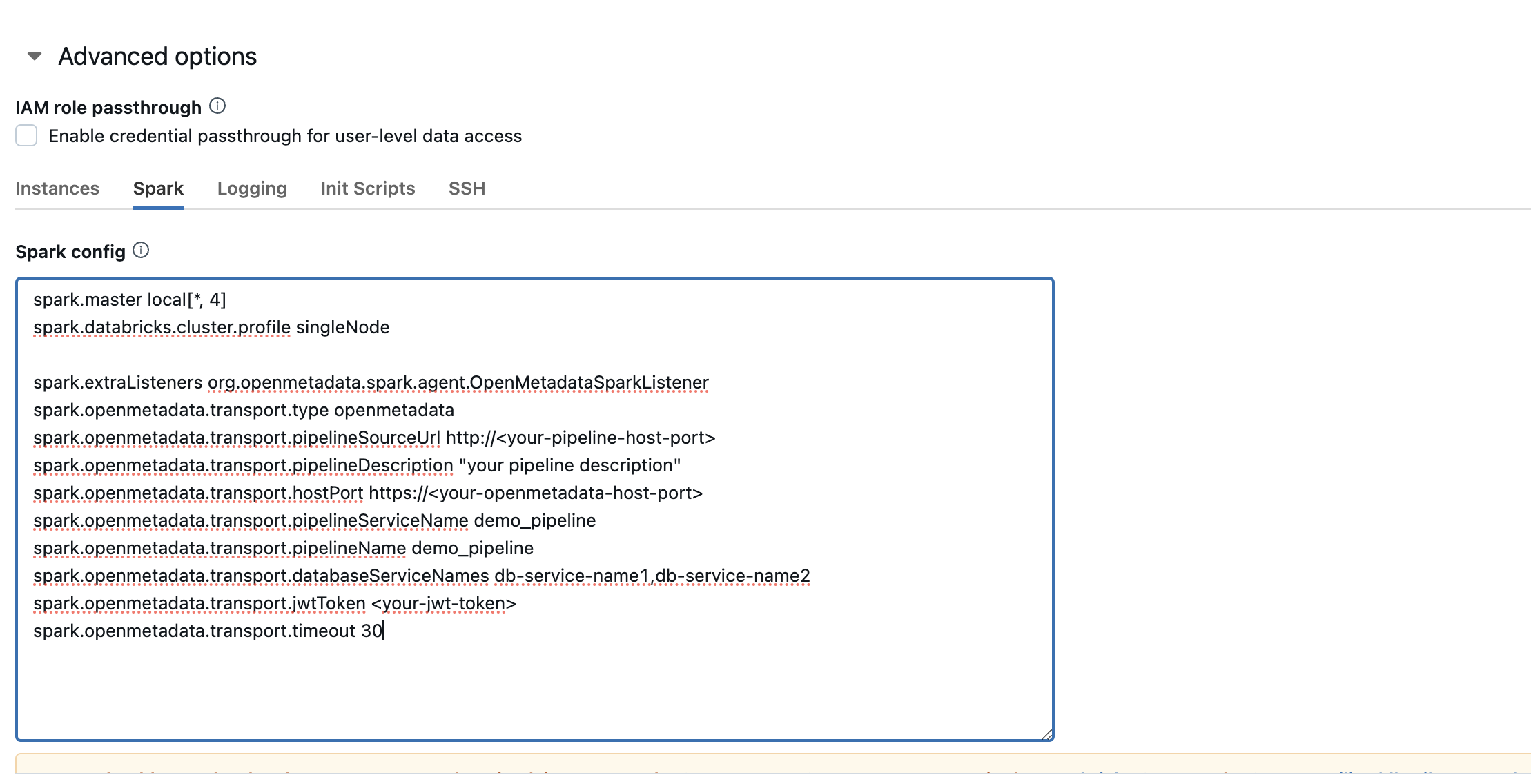

4. Configure Spark

After configuring the init script, you also need to update the Spark config.

Spark Set Config

These are the possible configurations; refer to the Configuration section above for detailed information about each one.
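The cluster's Spark config box takes one setting per line, with the key and value separated by a space. A small sketch, assuming you keep the settings from the Configuration section in a Python dict; databricks_spark_config_text is a hypothetical helper that renders them in that format for pasting.

```python
from typing import Dict


def databricks_spark_config_text(conf: Dict[str, str]) -> str:
    """Render settings as "key value" lines for the cluster's Spark config box."""
    lines = []
    for key, value in conf.items():
        # A key containing whitespace could not be told apart from its value.
        if any(ch.isspace() for ch in key):
            raise ValueError(f"Spark config keys cannot contain whitespace: {key!r}")
        lines.append(f"{key} {value}")
    return "\n".join(lines)
```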

After all these steps are completed, start or restart your compute instance, and you are ready to extract lineage from Spark into OpenMetadata.

Using Spark Agent with Glue

Follow the steps below to use the OpenMetadata Spark Agent with Glue.

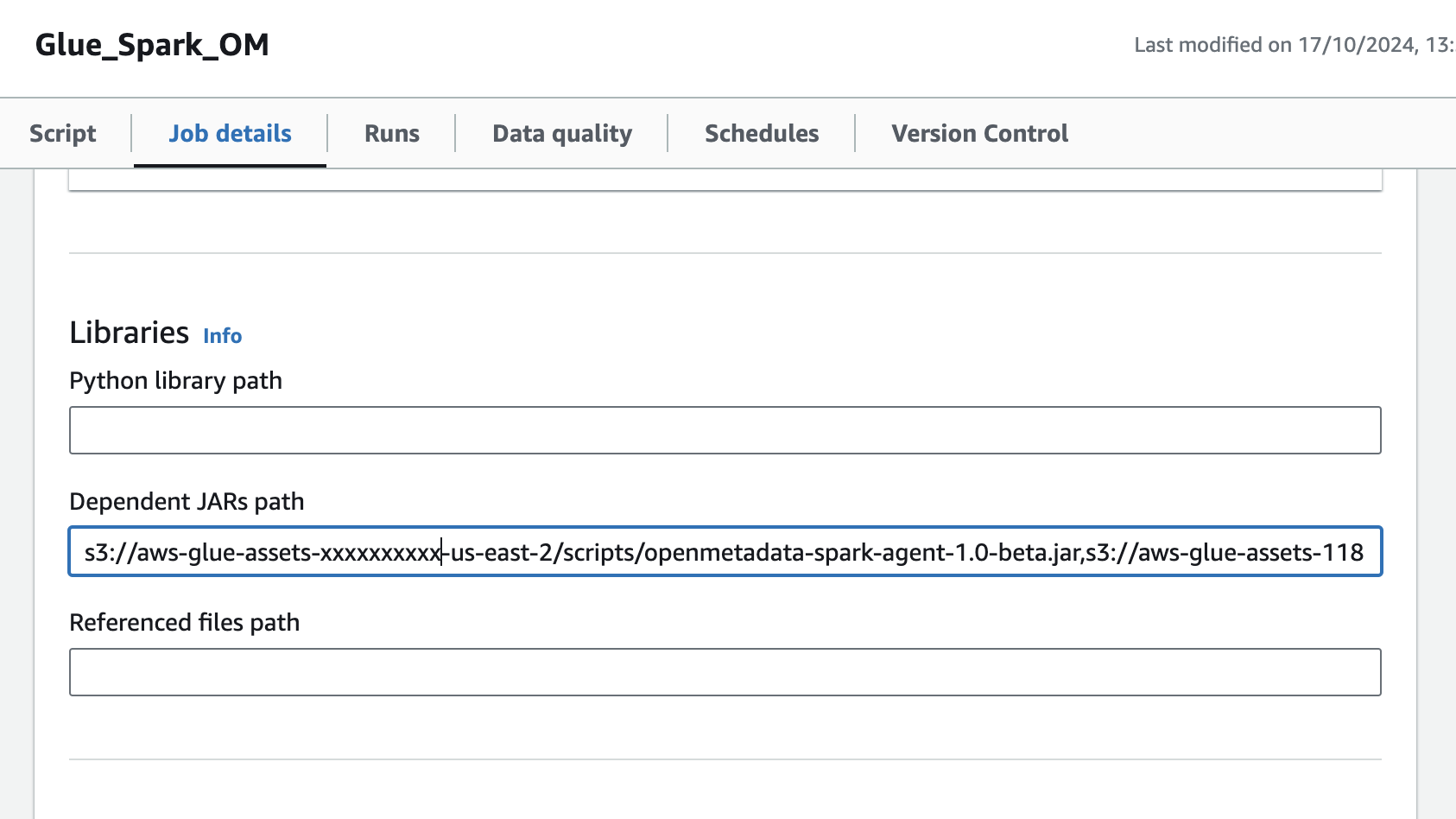

1. Specify the OpenMetadata Spark Agent JAR URL

- Upload the OpenMetadata Spark Agent jar to S3.

- Navigate to the Glue job. In the Job details tab, go to Advanced properties → Libraries → Dependent JARs path.

- Add the S3 URL of the OpenMetadata Spark Agent jar in the Dependent JARs path.

Glue Job Configure Jar

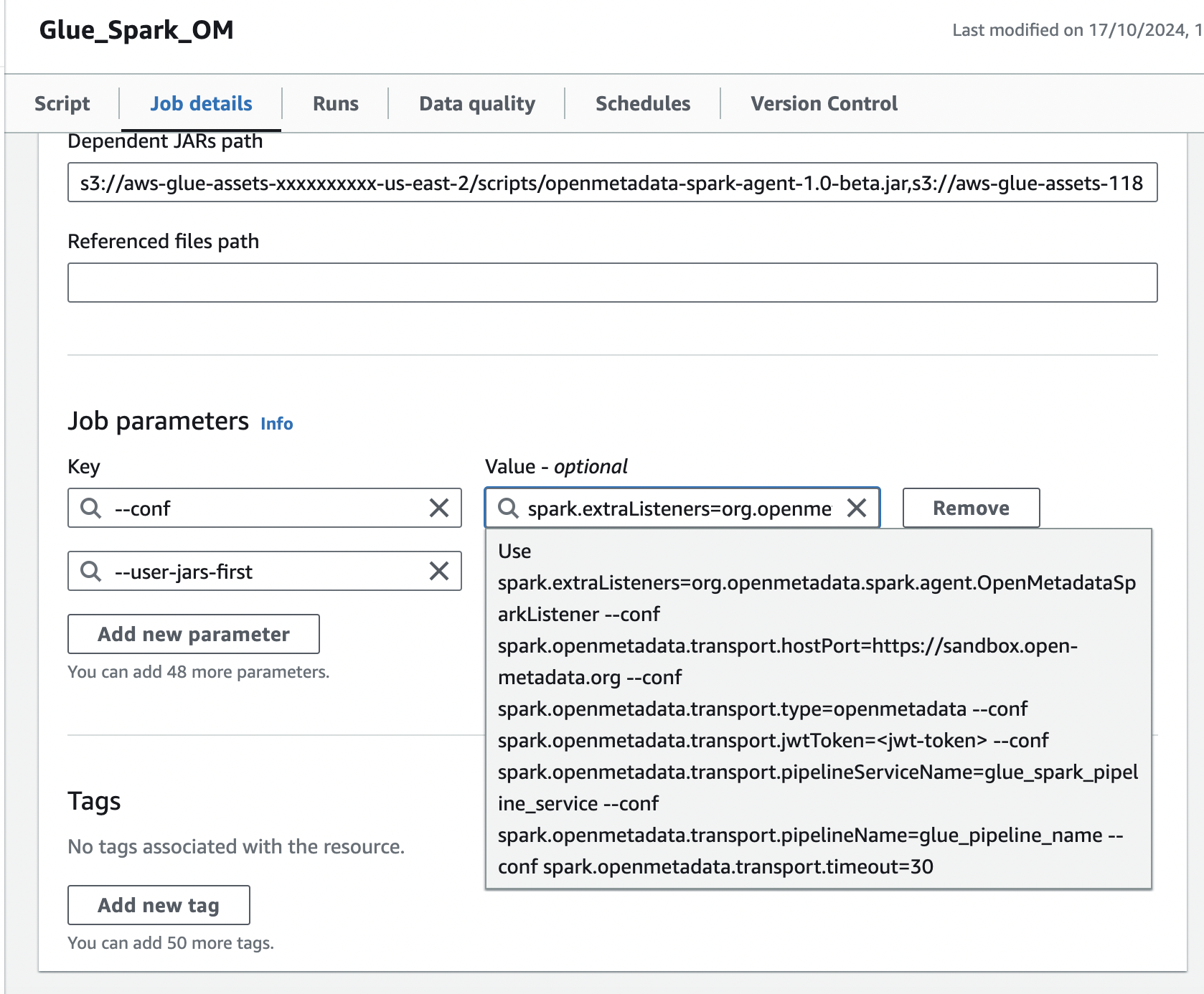

2. Add Spark configuration in Job Parameters

In the same Job details tab, add a new property under Job parameters:

- Add the --conf property with the following value, making sure to customize this configuration as described in the documentation above.

- Add the --user-jars-first parameter and set its value to true.
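The Glue settings above can also be expressed as job parameters. A hedged sketch, assuming that the Dependent JARs path is stored as the --extra-jars special parameter and that multiple Spark settings are passed by chaining them inside a single --conf value (k1=v1 --conf k2=v2), a commonly used workaround rather than a formally documented Glue feature. glue_job_arguments is a hypothetical helper; it rejects values containing whitespace to keep the chained string unambiguous.

```python
from typing import Dict


def glue_job_arguments(agent_jar_s3_url: str, conf: Dict[str, str]) -> Dict[str, str]:
    """Build Glue job parameters for the OpenMetadata Spark Agent."""
    if not agent_jar_s3_url.startswith("s3://"):
        raise ValueError("the agent jar must be referenced by an s3:// URL")
    pairs = []
    for key, value in conf.items():
        if any(ch.isspace() for ch in key + value):
            raise ValueError(f"whitespace would break the chained --conf value: {key!r}")
        pairs.append(f"{key}={value}")
    return {
        "--extra-jars": agent_jar_s3_url,  # assumed equivalent of "Dependent JARs path"
        "--conf": " --conf ".join(pairs),  # first pair is the value of --conf itself
        "--user-jars-first": "true",
    }
```

You could paste these values into the console, or pass the dict as DefaultArguments when creating the job with the AWS SDK.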

Glue Job Configure Params